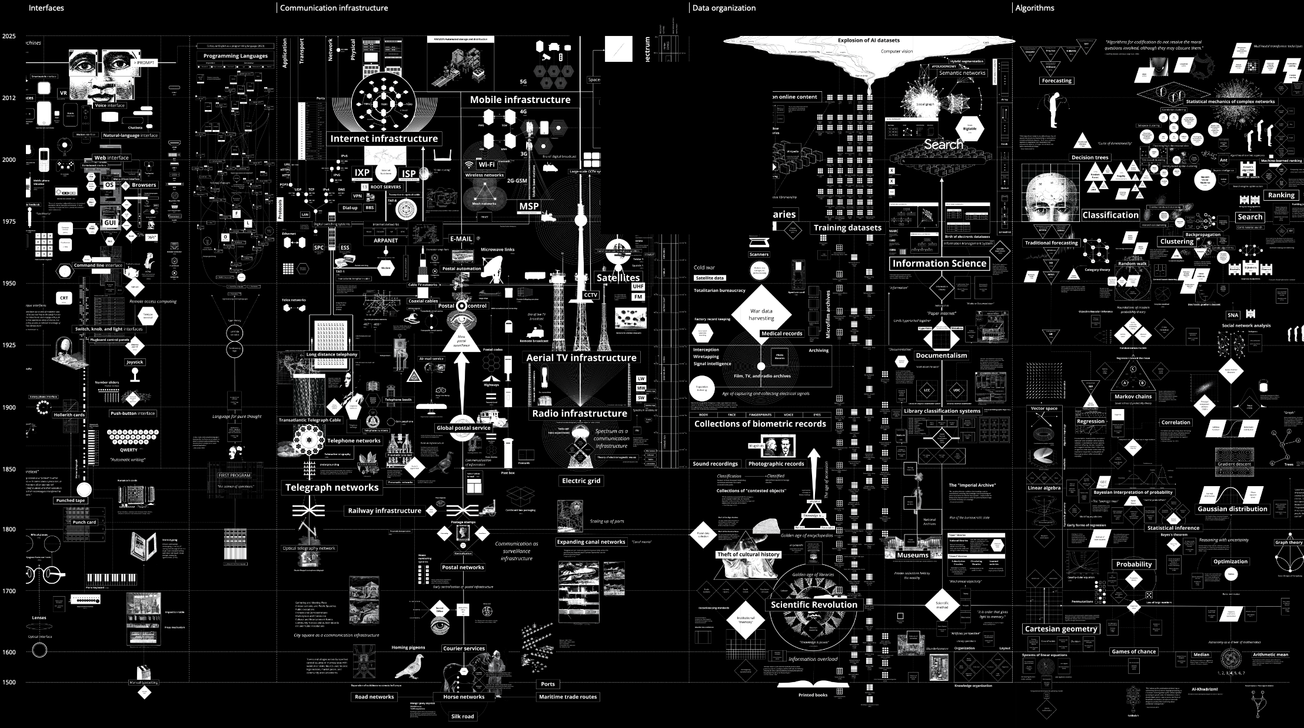

The datafication of human life — the transformation of daily activities into quantifiable data through pervasive computing — is reshaping how we live. From fitness trackers and smart home devices to location-aware apps and biometric scanners, an ever-growing network of sensors, wearables, and connected devices is turning our movements, choices, and interactions into actionable datasets.

On the optimistic side, this information can offer unprecedented insight into our behaviors, preferences, and habits. It enables more informed decisions about health, finances, and lifestyle. For example, wearable health monitors can detect anomalies early, and financial platforms can help optimize spending habits through predictive analytics.

But the same ubiquitous data collection that fuels convenience also amplifies risks. Governments and corporations can use personal data for targeted advertising, political profiling, and algorithmic decision-making — often without explicit consent. Surveillance infrastructure, once deployed, rarely contracts; instead, it becomes embedded in both civic and commercial systems.

The Human-Centered Lens on Datafication

A humanistic approach to data ethics requires that privacy, autonomy, and transparency be embedded into the design of data-driven systems. Yet significant barriers persist, including limited public understanding of the long-term implications of datafication, a lack of transparency about who can access personal data and how it is used, and inadequate legal and regulatory protections for user rights across borders. Without international data protection standards, individuals remain exposed to potential misuse of their information. Addressing these gaps demands a robust global framework that incorporates interoperable privacy laws, transparent consent mechanisms, and clearly defined data ownership rights.

The Transparency Problem

Among these barriers, transparency is the most elusive. Individuals often don’t know when they are being tracked, what data is collected, or how it will be used in the future. Even when privacy controls exist, they are often buried in opaque terms of service or scattered across fragmented settings, which places the burden on users. Making privacy literacy more accessible could empower people to make informed choices about their data. Without that literacy, the asymmetry between those who generate data and those who control it will continue to grow.

Reclaiming Control

If individuals could fully own and manage their data, the possibilities for equitable and inclusive societies would expand. Data sovereignty could enable communities to dictate the terms of their participation in digital ecosystems, deciding not only what to share but also with whom and for what purpose.

Such a shift would require fundamental changes in how data rights are recognized, enforced, and monetized. Until then, the datafication of the lived human experience remains a double-edged sword, offering both empowerment and exploitation, depending on who holds the keys.