The standard camera is stuck in time. Fixed frame rates, static sensors, and megapixel battles have dominated visual culture for decades. But a new imaging paradigm is quietly changing how machines—and now artists—capture the world. Neuromorphic event cameras don’t record frames. They don’t even shoot video in the conventional sense. Instead, they capture change itself—logging individual pixel-level brightness shifts as they occur, asynchronously, in real time. Built to mimic the retina’s dynamic response to movement, these sensors have been used in robotics, drones, and industrial automation. But a growing number of creative technologists and media labs are now exploring how neuromorphic vision can be used to reframe motion, perception, and time.

What Is a Neuromorphic Event Camera?

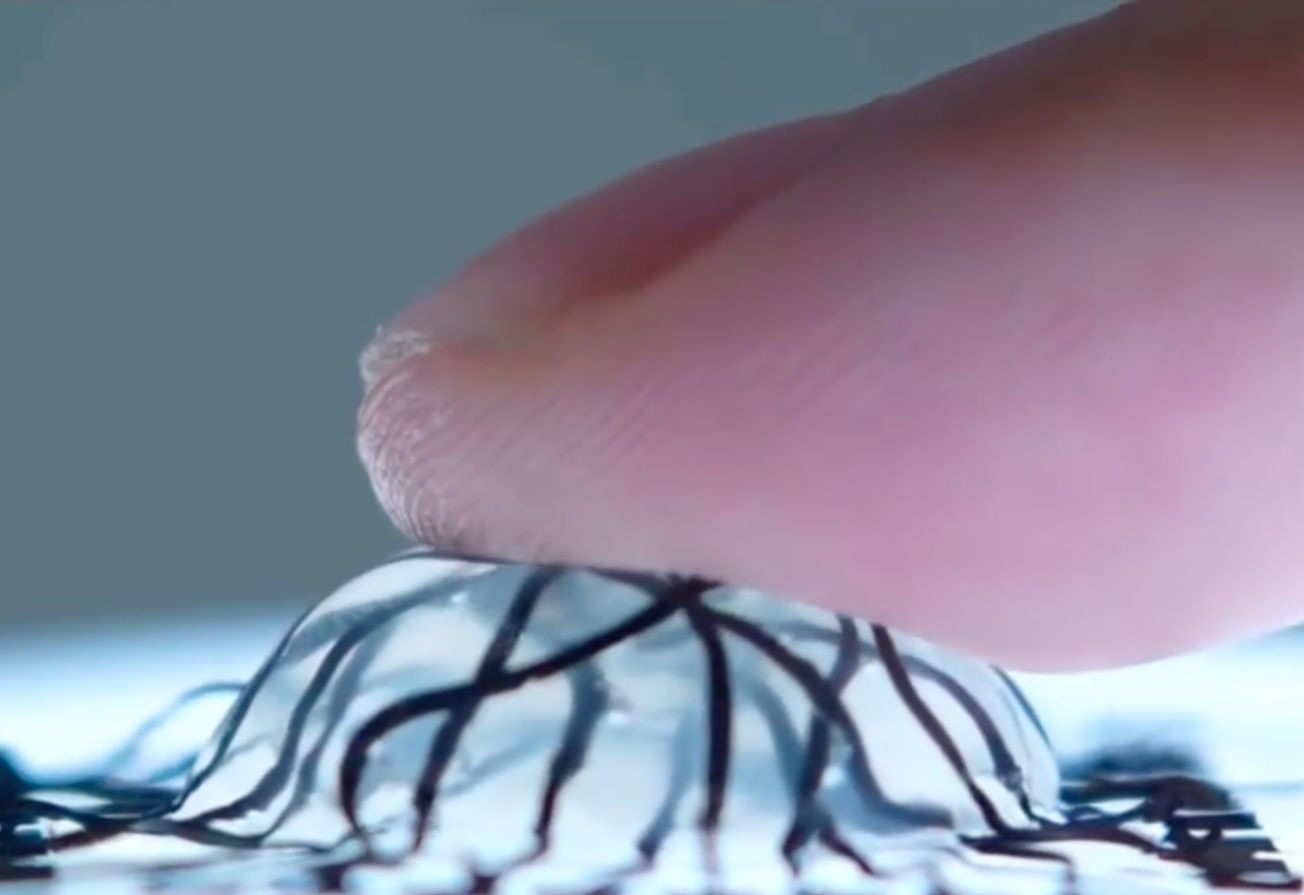

Unlike traditional cameras that capture scenes frame by frame, event cameras operate on a different logic. Each pixel works asynchronously. It monitors its own brightness and fires independently whenever the change since its last event crosses a set contrast threshold. The result is a stream of timestamped “events”, each recording which pixel changed, when, and in which direction (brighter or darker).
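The threshold behavior above can be sketched for a single pixel. This is a simplified model under stated assumptions. It uses the widely used idealized scheme in which a pixel compares log-intensity against its level at the last event. Brightness arrives here as discrete `(time, intensity)` samples, and the threshold of 0.2 is an illustrative value. Real sensors do this continuously in analog circuitry and add noise, refractory periods, and per-pixel threshold mismatch, all of which are ignored. `Event` and `pixel_events` are hypothetical names, not part of any vendor SDK.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp in seconds
    polarity: int  # +1 = brighter (ON), -1 = darker (OFF)


def pixel_events(x, y, samples, threshold=0.2):
    """Generate events for one pixel from (t, intensity) samples.

    Simplified contrast-threshold model (hypothetical helper): the pixel
    remembers the log-intensity at its last event and fires each time the
    current log-intensity has moved at least `threshold` away from it.
    Intensities must be positive.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)  # log-intensity at the last event
    events = []
    for t, intensity in samples[1:]:
        level = math.log(intensity)
        # A large jump can cross the threshold several times:
        # emit one event per crossing and step the reference each time.
        while abs(level - ref) >= threshold:
            polarity = 1 if level > ref else -1
            ref += polarity * threshold
            events.append(Event(x, y, t, polarity))
    return events


# Brightness rises from 1.0 to 1.5, holds, then drops to 0.9.
trace = [(0.000, 1.0), (0.001, 1.0), (0.002, 1.5), (0.003, 1.5), (0.004, 0.9)]
for e in pixel_events(3, 4, trace):
    print(e)
```

With this trace, the rise to 1.5 crosses the threshold twice (two ON events), the steady sample produces nothing, and the drop to 0.9 crosses it twice more (two OFF events). Unchanging pixels stay silent, which is where the format's efficiency comes from.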

This format allows for:

• Ultra-low latency (microsecond scale)

• High dynamic range (> 120 dB)

• Low power consumption

• No motion blur

Instead of flattening time into a sequence of fixed images, event cameras produce a fluid, responsive data set that can be analyzed or visualized in new ways.
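One common way to turn the stream back into something viewable is to slice it into short time windows and sum each pixel's polarities within a window, producing an "event frame". Below is a minimal sketch. It assumes events arrive as hypothetical `(x, y, t, polarity)` records with `t` in seconds and polarity ±1. `accumulate_frames` is an illustrative helper, not a library function.

```python
from collections import namedtuple

# Hypothetical event record: pixel column, pixel row, time (s), polarity (+1/-1).
Event = namedtuple("Event", "x y t polarity")


def accumulate_frames(events, width, height, window):
    """Bin events into frames covering `window` seconds each.

    Each cell holds the signed sum of polarities at that pixel during the
    window: positive where the scene brightened, negative where it darkened,
    zero where nothing changed. Frames are lists of rows (frame[y][x]).
    """
    if not events:
        return []
    start = min(e.t for e in events)
    end = max(e.t for e in events)
    n_frames = int((end - start) // window) + 1
    frames = [[[0] * width for _ in range(height)] for _ in range(n_frames)]
    for e in events:
        k = int((e.t - start) // window)  # index of the window this event falls in
        frames[k][e.y][e.x] += e.polarity
    return frames


events = [
    Event(0, 0, 0.000, +1),
    Event(1, 0, 0.005, -1),
    Event(0, 0, 0.012, +1),
    Event(0, 0, 0.025, +1),
    Event(0, 0, 0.026, +1),
]
frames = accumulate_frames(events, width=2, height=1, window=0.01)
print(frames)
```

The window length is the main creative control. Short windows preserve fine timing but give sparse frames. Long windows fill in shapes but smear moving edges, reintroducing a kind of blur.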

Two leaders in this space are:

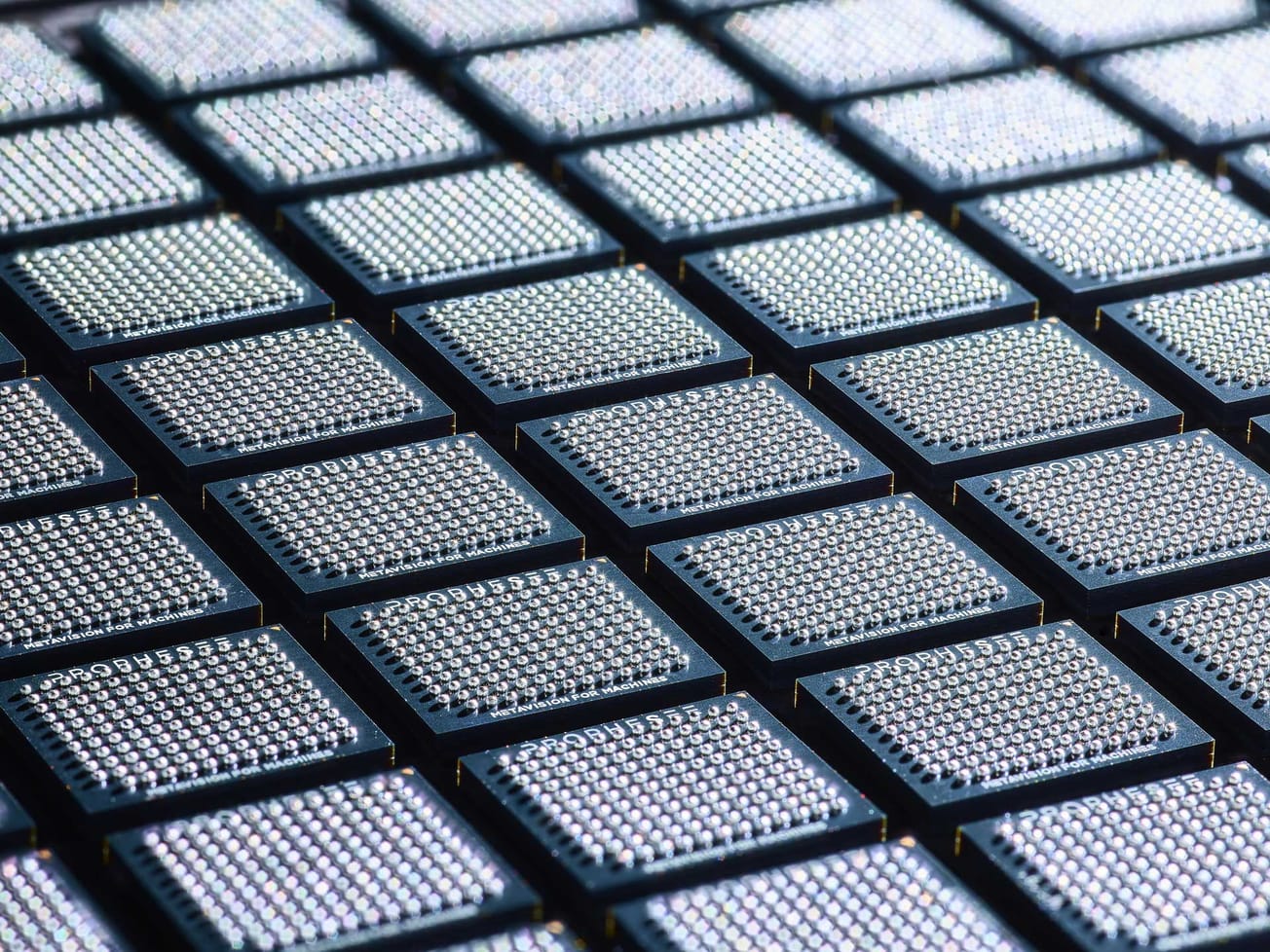

• Prophesee: a French startup whose Metavision sensor powers a growing ecosystem of industrial and research tools.

• iniVation: a Swiss company offering event-based cameras such as the DVXplorer and DAVIS346.

Scientific Validation, Creative Implications

Neuromorphic sensors have rapidly moved from laboratory novelty to field-tested instruments. In research published by Piatkowska et al. (2022) in IEEE Transactions on Pattern Analysis and Machine Intelligence, event cameras demonstrated superior capabilities in object tracking and motion analysis under challenging lighting conditions. Their asynchronous design allows for the capture of fast and subtle movements that traditional cameras often miss.

This shift from capturing frames to capturing change could prove transformative for artists and designers working with time-based media, motion tracking, or responsive systems. As Professor Tobi Delbruck, one of the pioneers of neuromorphic vision at ETH Zurich, noted: "Event-based vision allows us to represent what’s meaningful: change itself, not the redundant static."

Image credit: A coin spinning at 750 rpm, played back in jAER at 100× slower than real time; each rendered frame shows the events produced in 300 µs. Video by Sim Bamford.
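The numbers in this caption can be unpacked with a little arithmetic. This assumes the 300 µs window is measured in real time rather than playback time. Each rendered frame then covers only about 1.35° of the coin's rotation:

```latex
\begin{aligned}
f &= \frac{750\ \text{rev/min}}{60\ \text{s/min}} = 12.5\ \text{rev/s}
  \quad (\text{one revolution every } 80\ \text{ms}) \\
\Delta\theta &= 12.5\ \text{rev/s} \times 300 \times 10^{-6}\ \text{s} \times 360^\circ/\text{rev} = 1.35^\circ \\
T_{\text{playback}} &= 100 \times 300\ \mu\text{s} = 30\ \text{ms per rendered frame} \;\Rightarrow\; \approx 33\ \text{frames/s}
\end{aligned}
```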

Real-World Artistic Applications

- Dynamic Motion Capture at ETH Zurich

At ETH Zurich’s Institute of Neuroinformatics, researchers led by Tobi Delbruck have experimented with using event cameras for high-speed human motion capture. In one pilot project, event-based sensors captured detailed joint movements at high temporal resolution, opening possibilities for dancers, choreographers, and VR developers to map motion with extraordinary precision, even in low light or fast-action scenarios where conventional cameras struggle.

- Prophesee x Sony Semiconductor Collaboration

In 2021, Sony Semiconductor Solutions announced the IMX636, a stacked event-based vision sensor developed in collaboration with Prophesee that pairs Sony's stacked CMOS process with Prophesee's Metavision event-based pixel technology. Through academic access programs, institutions like MIT Media Lab and EPFL’s Laboratory of Movement Analysis and Measurement have begun integrating these sensors into creative research, from XR applications to live performance analysis and responsive installation design.

For creators working with hybrid realities, combining event data with conventional frames enables real-time blending of gestural input and computational imagery with minimal latency. The frames can come from a second camera or from a sensor such as iniVation's DAVIS346, which outputs both events and intensity frames.

Open Source and Educational Access

One major barrier to artistic use of event cameras has been access and tooling. Most hardware is built for engineers, not artists. But that’s beginning to change:

• The open-source jAER (Java Address-Event Representation) platform allows users to visualize and manipulate event data in real time.

• ESIM (Event Simulator) lets creatives prototype interactions using simulated event streams based on virtual scenes.

• Workshops and experimental courses are emerging at institutions like Carnegie Mellon University, RMIT in Australia, and ECAL in Switzerland, where students explore neuromorphic vision as a medium for responsive art, architecture, and interaction design.
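The "address-event" in jAER's name refers to how event data is transmitted. Each event is a pixel address plus a timestamp rather than a full image. The sketch below packs and unpacks such an address using a bit layout invented for illustration. Actual sensors and file formats each define their own encodings, so treat `pack_address`, `unpack_address`, and the field widths as hypothetical.

```python
# Hypothetical 32-bit address layout, invented for illustration:
#   bit 0       polarity (1 = ON/brighter, 0 = OFF/darker)
#   bits 1-15   x coordinate
#   bits 16-30  y coordinate
X_SHIFT = 1
Y_SHIFT = 16
COORD_MASK = 0x7FFF  # 15 bits per coordinate


def pack_address(x: int, y: int, on: bool) -> int:
    """Pack a pixel location and polarity into one integer address."""
    if not (0 <= x <= COORD_MASK and 0 <= y <= COORD_MASK):
        raise ValueError("coordinate does not fit in 15 bits")
    return (y << Y_SHIFT) | (x << X_SHIFT) | int(on)


def unpack_address(addr: int) -> tuple[int, int, bool]:
    """Recover (x, y, on) from an address produced by pack_address."""
    x = (addr >> X_SHIFT) & COORD_MASK
    y = (addr >> Y_SHIFT) & COORD_MASK
    return x, y, bool(addr & 1)


# An address-event stream is a sequence of (timestamp, address) pairs;
# here timestamps are integer microseconds.
stream = [
    (1000, pack_address(12, 34, True)),
    (1003, pack_address(13, 34, False)),
]
for t_us, addr in stream:
    print(t_us, unpack_address(addr))
```

Because only changing pixels produce addresses, a quiet scene yields a short stream and a busy one a long stream. Bandwidth follows activity rather than a fixed frame size.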

Limitations and Learning Curve

While powerful, event cameras are not plug-and-play. The raw data stream is not an image, so artists must build custom pipelines to visualize, sonify, or map it meaningfully. Unlike RGB or depth cameras, the event stream carries no color or absolute intensity, only change. This makes event cameras best suited for motion-centric experiences, gesture tracking, or low-light sensing. But for practitioners focused on embodied media, interaction, and adaptive systems, this raw data stream offers new expressive dimensions.
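As one example of such a custom pipeline, a window of events can be reduced to a few numbers suitable for driving sound or light. These are the event rate (how much is changing) and the centroid of the events (where it is changing). This is a sketch under assumed choices. The `Event` record, the `window_features` name, the linear 0-127 scaling, and the saturation rate are all hypothetical, picked only to illustrate the mapping step.

```python
from collections import namedtuple

# Hypothetical event record: pixel column, pixel row, time (s), polarity (+1/-1).
Event = namedtuple("Event", "x y t polarity")


def window_features(events, width, height, duration, max_rate=100_000.0):
    """Reduce one window of events to (intensity, cx, cy) control values.

    intensity: event rate mapped linearly onto 0-127 (a MIDI-style range),
               saturating at `max_rate` events per second (arbitrary choice).
    cx, cy:    mean event position normalized to 0.0-1.0 across the sensor,
               or None when the window contains no events.
    """
    rate = len(events) / duration  # events per second in this window
    intensity = round(127 * min(rate, max_rate) / max_rate)
    if not events:
        return intensity, None, None
    cx = sum(e.x for e in events) / len(events) / max(width - 1, 1)
    cy = sum(e.y for e in events) / len(events) / max(height - 1, 1)
    return intensity, cx, cy


window = [Event(0, 0, 0.000, 1), Event(10, 20, 0.001, -1)]
print(window_features(window, width=11, height=21, duration=0.01, max_rate=200.0))
```

Calling `window_features` once per window (say, every 10 ms) yields a stream of control values. Routing them to a synthesizer, lighting controller, or game engine is left to whatever toolkit the piece already uses.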

Toward Post-Cinematic Media

As immersive technologies evolve—from XR to spatial computing—so too must our sensing tools. Neuromorphic cameras offer a fundamentally different logic: not recording what is, but what becomes. For artists, this suggests a move away from archival image-making and toward situated, ephemeral interaction—designing systems that respond not to presence, but to transformation.

Whether powering kinetic installations, embodied interfaces, or responsive environments, event-based vision may be less about replacing the camera, and more about redefining how we perceive perception itself.