Artificial intelligence is only as good as the data it consumes. Yet the datasets driving machine learning are far from neutral: they reflect histories of exclusion, erasure, and cultural dominance. From natural language processing to facial recognition, the question of whose knowledge gets encoded is increasingly unavoidable. Decolonizing the dataset is therefore not only a technical problem but a cultural and political one, demanding a rethink of authorship, representation, and participation in AI design.

Missing Data, Missing Voices

Artist and researcher Mimi Ọnụọha shows that what’s absent from datasets can be as revealing as what’s included. Her project The Library of Missing Datasets (2016–ongoing) collects examples of information that institutions routinely fail to gather—such as statistics on undocumented communities, instances of police violence, or other marginalized experiences. By naming these absences, Ọnụọha makes visible that missing data is rarely accidental; it reflects structural conditions and decisions about whose lives are counted and whose are left out.

She argues that datasets are cultural artifacts shaped by power, not neutral repositories of facts. When these omissions carry into AI training sets, they scale into systems that overlook or misrepresent entire populations, reinforcing inequities in who technologies can “see” or “hear.”

Training Machines Differently

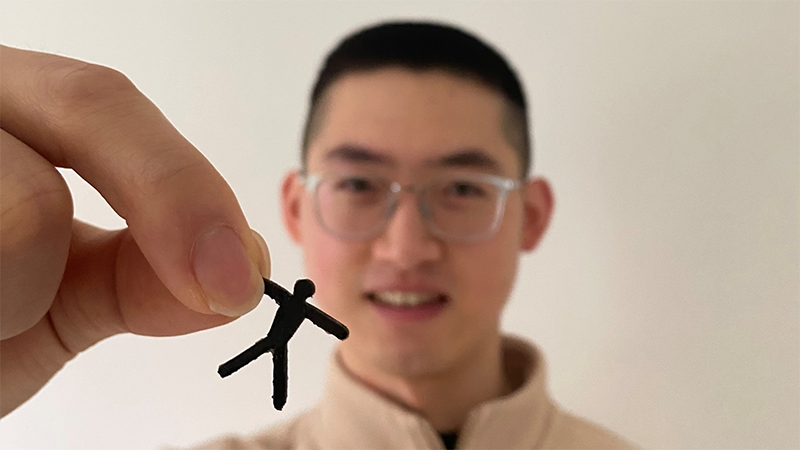

Some practitioners are reimagining what dataset authorship could look like. Stephanie Dinkins, an artist working at the intersection of AI and community organizing, is known for her long-term project Conversations with Bina48, in which she speaks with a humanoid robot modeled on the likeness and memories of a Black woman. For Dinkins, these dialogues concern not only human–machine interaction but also how cultural specificity and family narratives can shape AI. She extends this inquiry in Not The Only One (N'TOO), an AI trained on oral histories from three generations of her own family, so that the people whose stories are told, rather than an anonymous corpus, supply the training data.

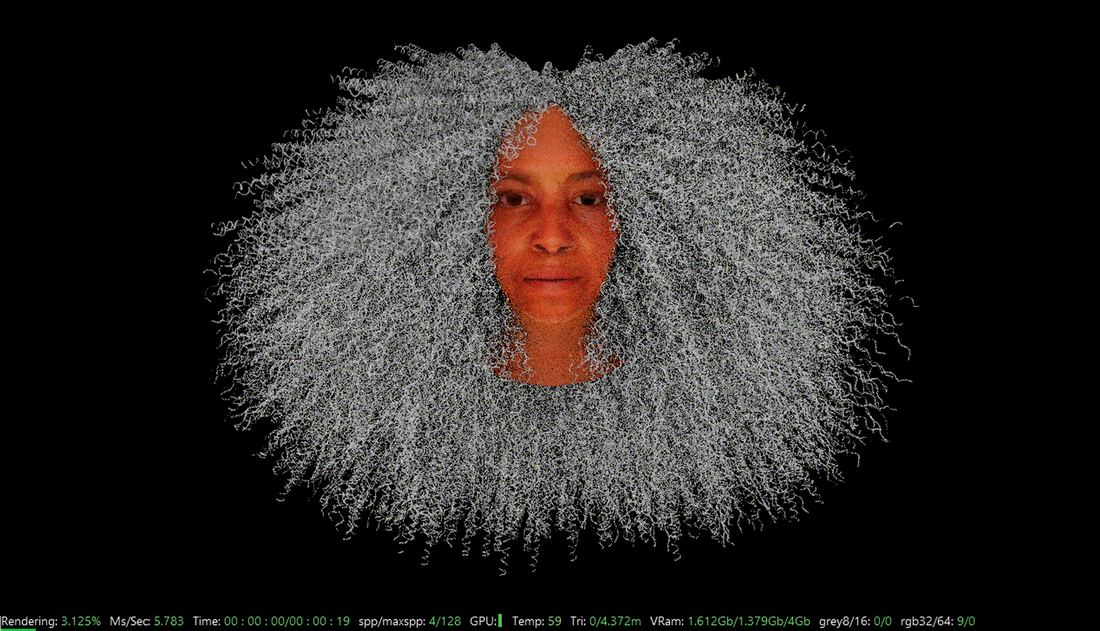

Rashaad Newsome takes a parallel approach with his AI entity Being the Digital GRIOT, which is trained on archives of Black cultural production and critical theory. Rather than presenting AI as a universal or objective intelligence, Being performs, lectures, and interacts with audiences from within a distinct cultural lineage.

Together, these projects move beyond critique into practice, offering models for how AI might be trained through community-grounded processes. They open space for cultural knowledge that has historically been excluded from technological infrastructures.

Collective Infrastructures

Decolonizing datasets also requires rethinking how infrastructure itself is built. The Masakhane Project, a grassroots research collective across Africa, develops open-source natural language processing datasets, models, and tools for African languages. Most mainstream NLP systems privilege English and a handful of global languages, sidelining hundreds of millions of speakers. Masakhane addresses this imbalance by mobilizing a distributed network of contributors who build resources in their own languages, reshaping who participates in AI development.

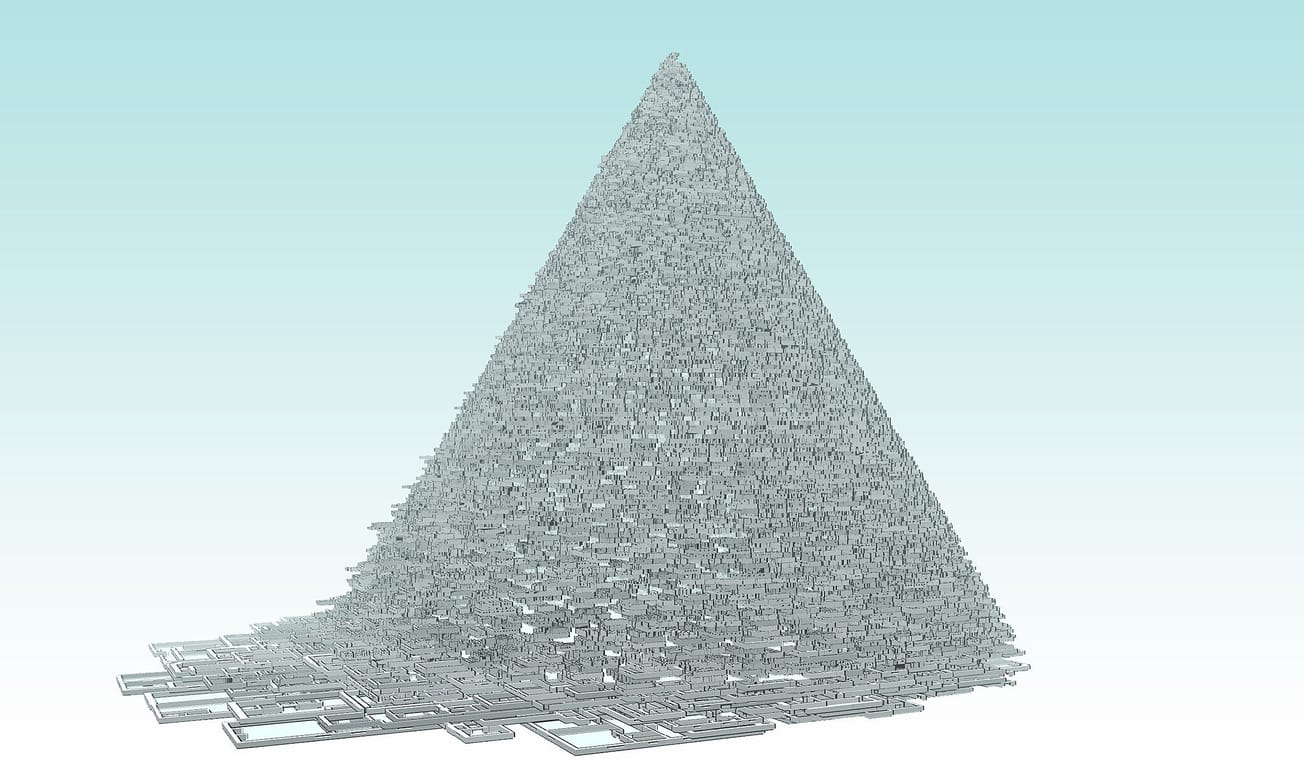

The Indigenous Protocol and AI Working Group challenges the Western epistemologies that dominate AI systems. Its work asks what it would mean to embed Indigenous worldviews into machine learning, where training is grounded in relationship rather than extraction and knowledge is guided by protocols of respect, consent, and reciprocity. Instead of treating datasets as resources to be mined, this approach repositions them as living cultural entities.

Critical scholarship reinforces these shifts. Cognitive scientist Abeba Birhane has written about "algorithmic colonization," describing how data-driven technologies can reproduce the extractive logics of empire in digital form. She advocates for relational ethics in AI, where the value of data is situated in context, community, and ongoing dialogue rather than in scale or efficiency.

In Latin America, the feminist tech collective Coding Rights addresses similar issues through activism and creative interventions. Known for projects such as Chupadados, the collective exposes how algorithmic systems perpetuate discrimination, particularly against women and other marginalized groups, and links dataset politics to broader struggles around rights, surveillance, and digital governance.

Taken together, these initiatives show that alternatives are not only possible but already in motion. They suggest that decolonizing AI is less about "fixing" biased systems from the outside and more about designing infrastructures that begin from different worldviews.

Beyond Inclusion

Decolonizing AI cannot be reduced to adding more data or expanding categories of representation. As many researchers note, inclusion alone risks integrating marginalized knowledge into systems that remain extractive at their core. The projects above point instead to a deeper shift: reframing what counts as data, how it is gathered, and who has agency in the process. From Ọnụọha’s mapping of absence to Dinkins’ and Newsome’s culturally specific training practices, from Masakhane’s distributed infrastructures to the Indigenous Protocol group’s epistemic reframing, the momentum is toward plurality. The future of AI is unlikely to be shaped by a single universal dataset but by many situated ones.

As Birhane and Coding Rights emphasize, the politics of datasets extend beyond technical domains. They touch on cultural survival, digital rights, and the governance of knowledge itself. The challenge is not only to demand representation but to build AI infrastructures that reflect and sustain the diversity of human experience.