Generative storytelling isn’t about automation. It’s about reconfiguration. When models write, recombine, or adapt text, stories stop being fixed scripts and start behaving like adaptive systems—shaped by both human input and machine inference.

Authorship in Flux

The first assumption generative storytelling unsettles is authorship. Who owns a story co-produced by human prompts, training data, and machine inference? Shekhar Kapur’s Warlord, a sci-fi series in development with Studio Blo, takes a collaborative approach. Built with generative AI, the project is framed as a shared-IP universe where creators—and eventually audiences—can expand the narrative. Kapur calls this democratization. But dispersing authorship raises questions: who maintains coherence, and who bears responsibility for ethics or biases encoded in the system?

Natasha Lyonne’s Uncanny Valley, developed with AI studio Asteria, pushes hybridity further. Asteria’s custom model, “Marey,” trained on licensed datasets, shaped both visuals and narrative logic. Here, AI functions not just as a production tool but as a narrative presence, blurring the line between authorship and story. The experiment highlights both promise and pitfalls: originality, credit, and accountability become murkier, while AI’s statistical tendencies risk flattening distinctive voices.

Narrative Systems

If authorship disperses, narrative itself becomes unstable. Stories operate less like texts and more like adaptive systems. Guy Maddin’s Seances, co-created with the National Film Board of Canada, makes this instability explicit. Each screening is a one-off short film, algorithmically assembled from an archive of pre-shot footage and erased after viewing. With billions of possible permutations, the work shifts attention from a definitive story to the generative process.

The Diary of Sisyphus, written entirely by GPT-Neo, extends the experiment into feature-length cinema. Critics called it “mechanical poetry”: slow, surreal, uneven, but philosophically resonant. The film suggests AI can sustain narrative at feature length, but coherence and emotional depth remain fragile.

Hidden Door, an AI storytelling game in early access, takes the opposite approach. Players move through structured “story cards” and guided arcs designed to constrain generation. The system preserves coherence but sacrifices surprise. The trade-off points to a broader design tension: how much freedom can be given to generative systems before narrative breaks down?

Personalization and Cultural Meaning

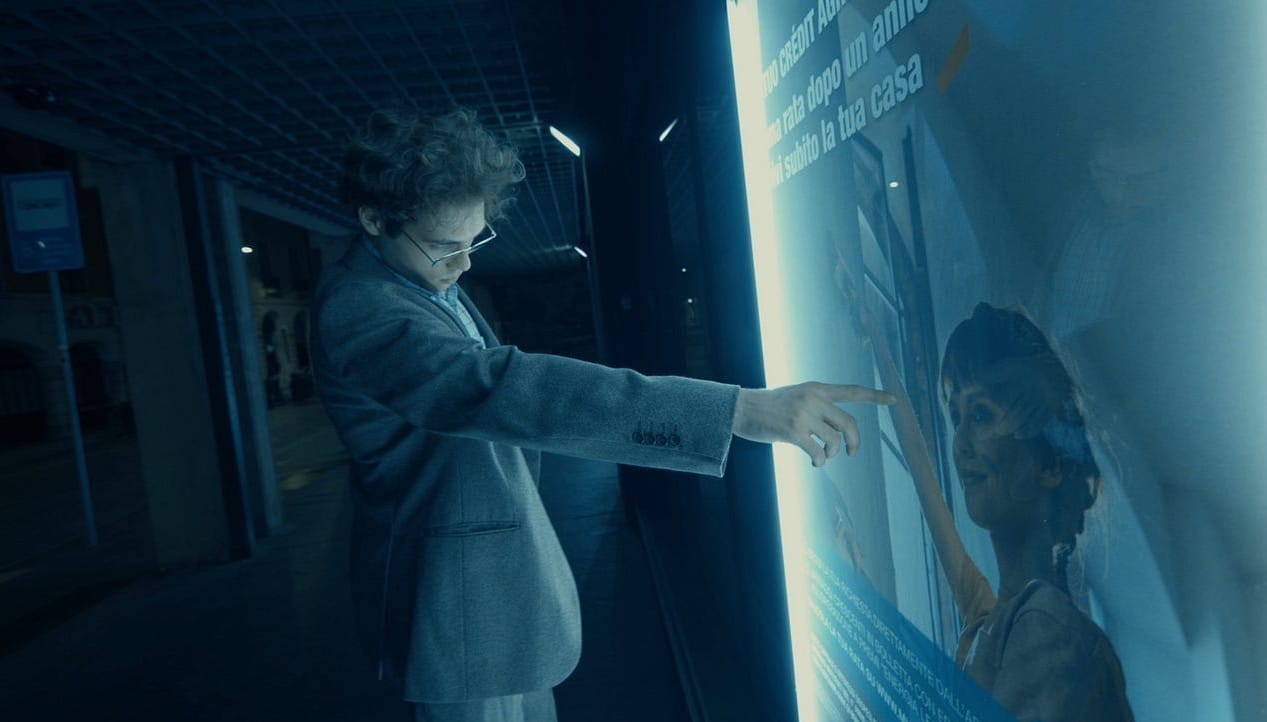

Generative storytelling also alters how audiences experience text. Instead of a single shared version, each person may encounter a personalized flow shaped by preferences or interactions. Google DeepMind’s ANCESTRA, which premiered at Tribeca, shows a hybrid model of production. Developed with Primordial Soup, it combines live-action footage with AI-generated sequences from DeepMind’s Veo and Imagen models. The film demonstrates how closely human-shot and machine-generated imagery can be integrated. Yet it surfaces a cultural dilemma: if every narrative can splinter into individualized versions, what happens to stories as collective reference points?

The Wizard of Oz reimagining at Las Vegas’s Sphere takes another approach. Using generative AI to restore and upscale the 1939 classic into a 16K immersive projection, the project reintroduces a cultural icon through spectacle. Supporters see revitalization; critics call it revisionism. The question lingers: how much alteration can a classic absorb before it ceases to be itself?

The Dream Within Huang Long Cave pushes personalization into intimacy. Visitors interact with YELL, a virtual avatar modeled on the artist’s father, whose dialogue adapts to participants in real time. Here storytelling becomes psychologized, raising ethical questions about emotional influence when narrative is mediated by AI.

Designing for Difference

The path forward lies in hybrid practices that shape AI critically rather than consume it passively. Seances and Hidden Door show how design can balance contingency and coherence. Warlord and Uncanny Valley illustrate both the allure and the complications of collaborative authorship. Huang Long Cave points to data-driven narrative experiments where ethical stakes are inseparable from aesthetics.

The debate is no longer whether AI belongs in storytelling—it already does—but how it should be used. Will generative systems churn out formulaic variations, or can they become frameworks for inquiry, collaboration, and genuinely new narrative forms? Generative storytelling is not just a tool for faster scripts or infinite content. It is reshaping the logic of narrative itself: distributing authorship, destabilizing structure, and personalizing meaning. The challenge is not whether AI can tell stories, but what kinds of stories we want it to tell—and what it means to tell them together.