While the concept of smart glasses isn't novel, previous attempts haven't gained widespread adoption. Earlier iterations include Google Glass, North's Focals glasses, Bose's discontinued audio AR sunglasses, the Ray-Ban Meta smart glasses with beta AI features, and most recently, Oppo’s Air Glass 3. However, Brilliant Labs' latest glasses appear particularly intriguing because they are expected to be entirely open source and customizable, offering users greater flexibility than existing options.

Brilliant Labs' new smart glasses will incorporate multiple AI models that offer web search and translation capabilities alongside AI visual analysis in a slick open-source wearable. As depicted in a video shared by Brilliant Labs, users can use voice commands to instruct the glasses to perform various tasks. These tasks range from identifying landmarks in view and searching the web for specific items like sneakers to retrieving nutrition details for food items. The information is displayed as an overlay directly on the lens.

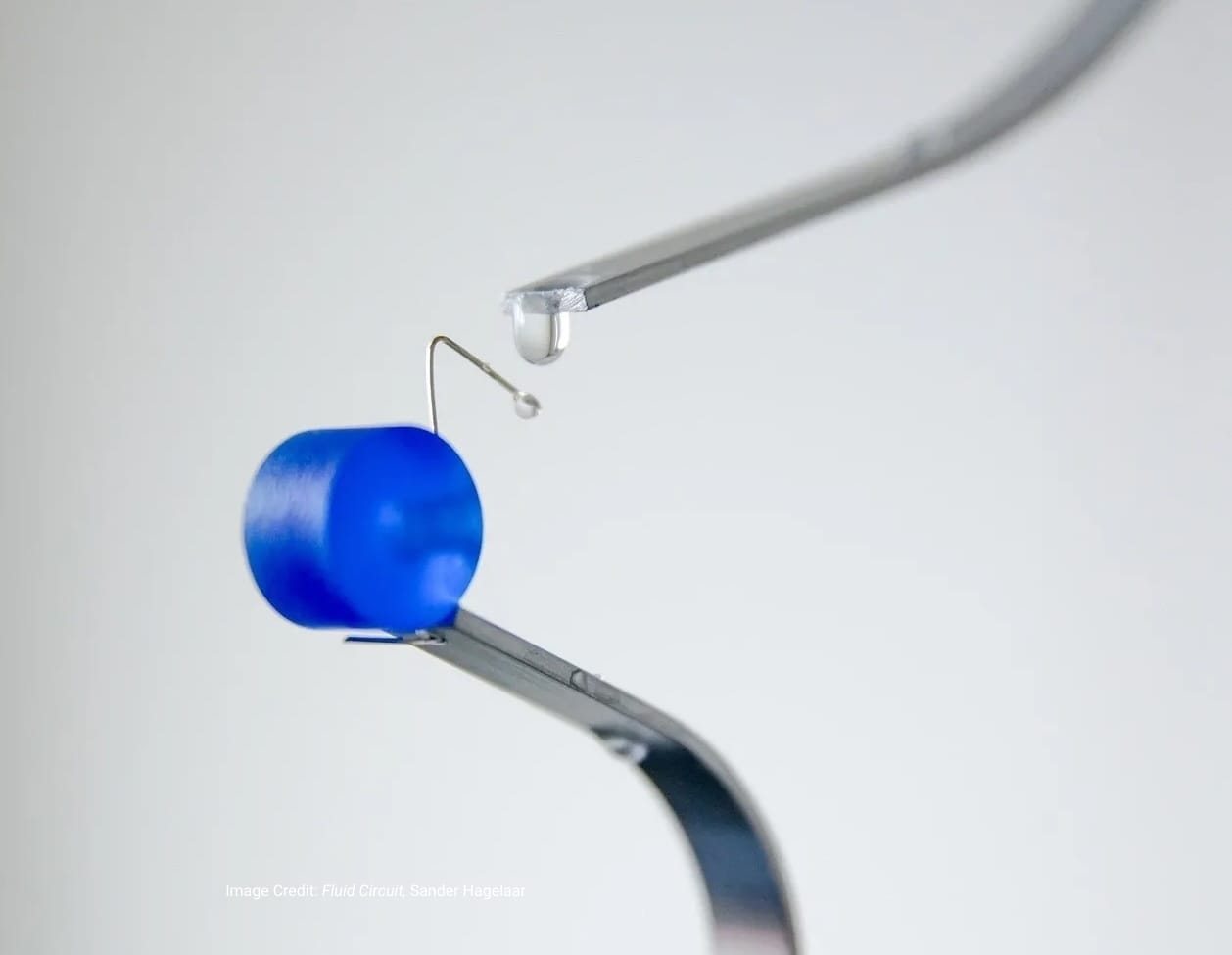

The glasses are equipped with a camera for both object recognition and photo and video capture, as well as a microphone for voice interaction.

What does this mean for you? Well, when out shopping, dining, or in transit, you can access an array of information instantly. You can check whether a product was ethically sourced, look up the nutritional value of your next meal, identify landmarks, or get real-time language translation. The information is shown on a micro-OLED display that uses a "geometric prism."

Brilliant Labs’ app, Noa, paired with the company's frames, features an AI assistant powered by OpenAI for visual analysis, Whisper for translation services, and Perplexity for web searches. In an interview with VentureBeat, Brilliant Labs stated that its Noa AI "learns and adjusts based on both user interactions and the tasks assigned to it."