For decades, the screen has been the primary surface of interaction: from CRTs to smartphones, pixels have defined how we access information, navigate tools, and communicate. But design futures are increasingly shifting away from glass rectangles. Gesture, voice, and multimodal systems are positioning themselves as the next frontier—interfaces less about tapping icons and more about embodied interaction.

From Screens to Embodied Interfaces

Touchscreens revolutionized personal computing by making interaction tactile, but they are now reaching their limits. Fatigue, distraction, and ergonomic constraints show that screens are not always the best interface for work, play, or learning. Smart speakers, mixed reality headsets, and sensor-rich environments suggest alternatives where voice, gesture, and environmental cues become primary channels.

Gesture recognition, once confined to gaming experiments like Nintendo’s Wii or Microsoft’s Kinect, is maturing into infrastructure. Apple’s Vision Pro, released in 2024, sets a new benchmark by relying on micro hand gestures and eye tracking as its primary navigation method. Instead of controllers or keyboards, interaction is choreographed directly through embodied cues. Advances in platforms like Ultraleap further extend hand-tracking precision for VR/AR and training simulations, showing how gesture is moving from novelty to infrastructure.

Voice is undergoing a similar transformation. Early smart assistants revealed the limits of rigid command systems, but large language models now enable more conversational and adaptive voice interaction. Google’s Project Euphonia pushes this further, training systems to better understand atypical speech patterns—making voice a more inclusive modality. These advances not only transform accessibility but also enable hands-free workflows across fields from surgery to manufacturing.

Designing Multimodal Futures

The trajectory is not gesture or voice—it is multimodal interaction. The most powerful systems combine input types: sketching with gestures, refining with voice, finalizing on screens. A surgeon might navigate 3D models with hand motions while dictating notes, while a designer could manipulate virtual forms in space while using spoken commands to control tools.

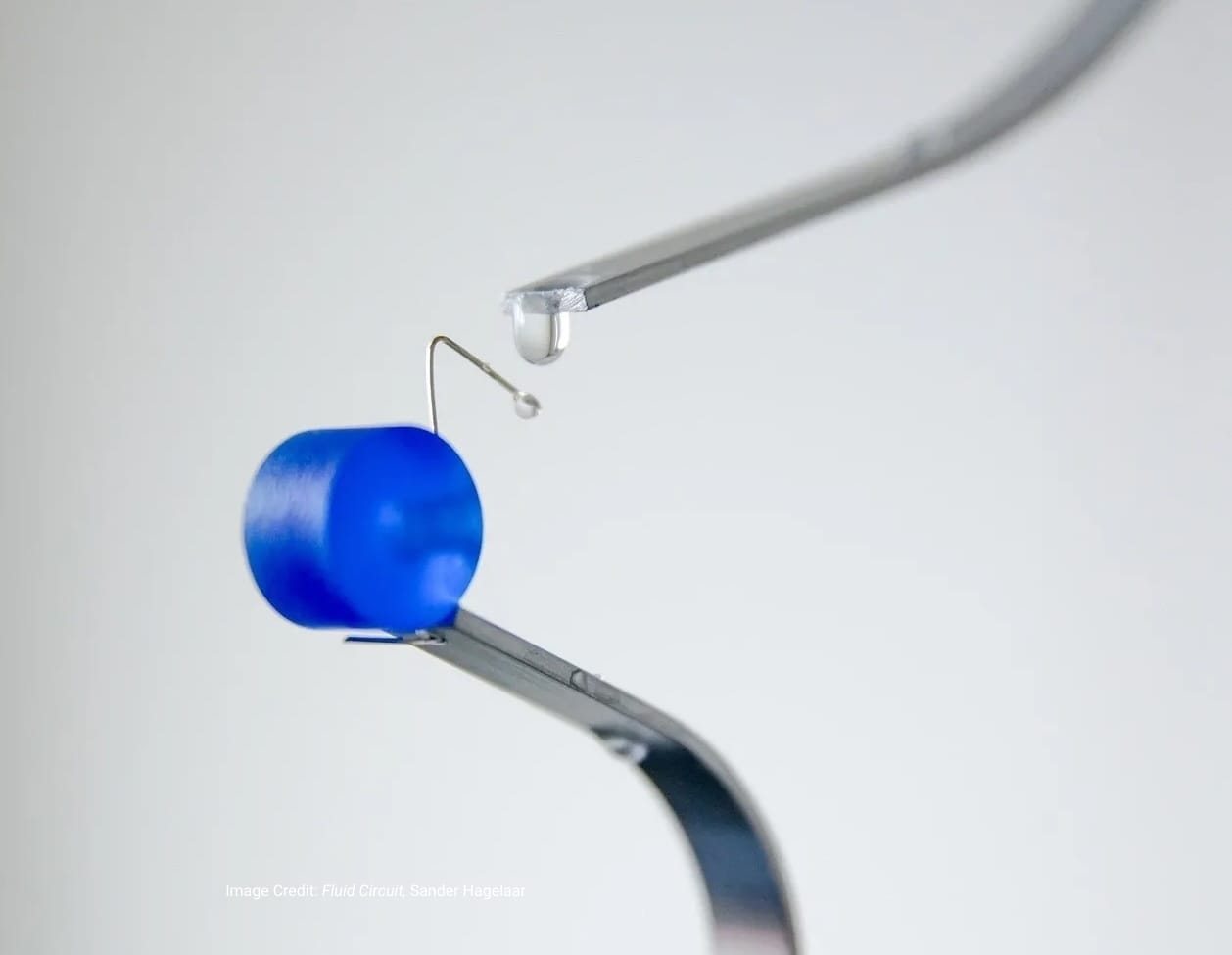

Research labs are actively prototyping these futures. The MIT Media Lab’s Fluid Interfaces Group has developed projects such as AlterEgo, a wearable device that interprets silent speech through neuromuscular signals, and the Reality Editor, which uses augmented reality overlays to let users control IoT devices through spatial gestures. At Disney Research, projects like EM-Sense explored how a smartwatch could recognize objects by detecting their electromagnetic signatures. Taken together, these initiatives illustrate how multimodal systems—combining gesture, wearables, and environmental sensing—are moving toward context-aware, embodied computing.

On the developer side, XR design toolkits from Unity and Unreal are providing frameworks for combining gaze, voice, and haptic input, giving designers the building blocks for multimodal ecosystems. For practitioners, this shift demands a new design vocabulary. Wireframes and static flows give way to movement scripts, sonic cues, and conversational prototypes. Instead of designing only for visual layout, designers must choreograph how different inputs complement each other in sequence, ensuring they work as a coherent interaction rather than competing signals

Ethics, Bias, and Access

The promise of post-screen interaction carries significant risks. Gesture and voice interfaces often depend on always-on cameras and microphones, raising privacy and surveillance concerns. Voice recognition systems still struggle with accents and dialects, and gesture tracking can misinterpret across body types or cultural norms.

Inclusive design requires broad datasets, intentional testing, and safeguards against bias. Initiatives like Mozilla Common Voice, which builds open datasets for training speech models across languages and dialects, are critical steps toward equity. Designers must also resist novelty for novelty’s sake, ensuring that new modalities genuinely reduce friction and expand access rather than complicate interaction.

Toward Ambient and Adaptive Environments

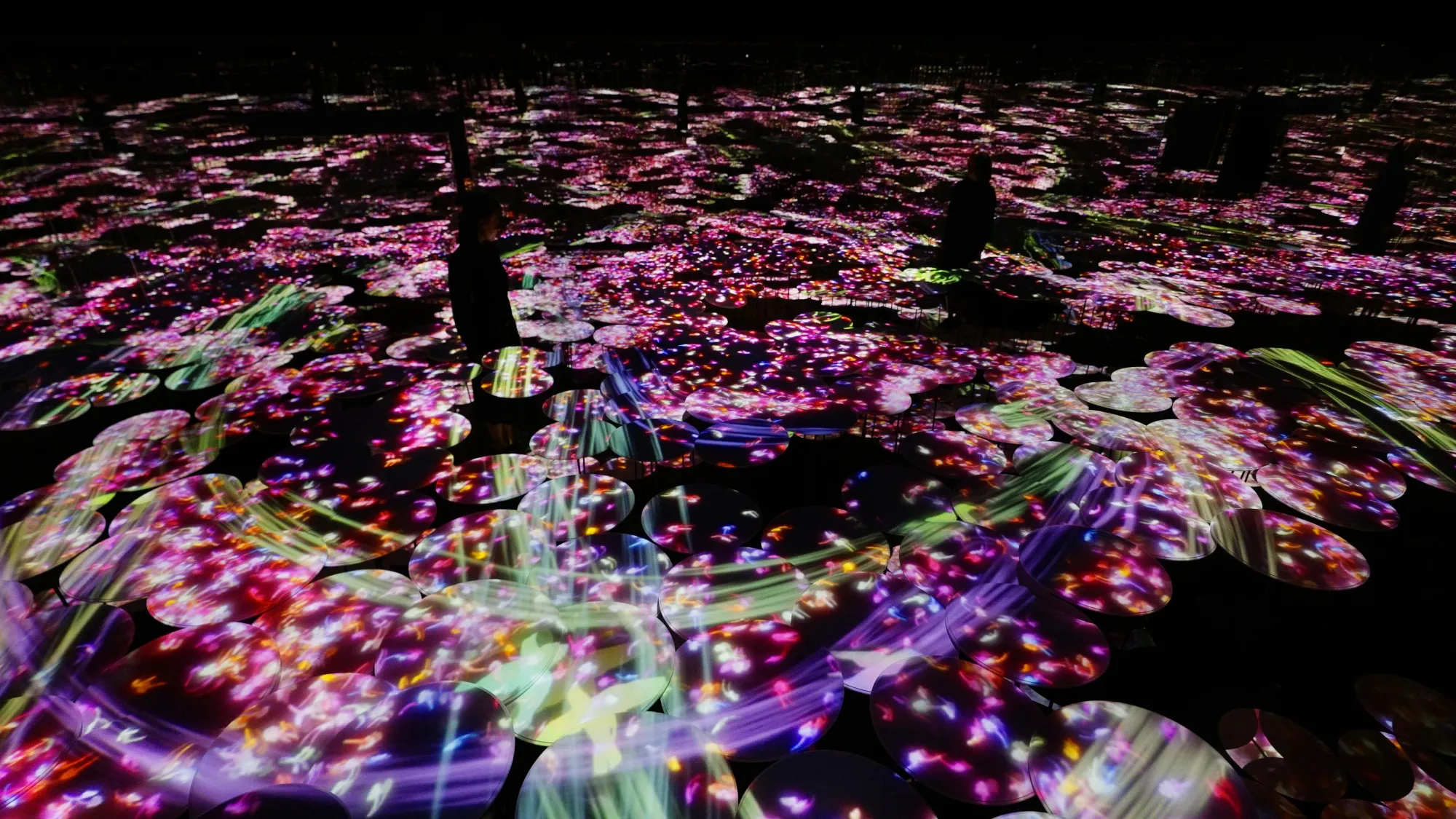

The long view points to computing dissolving into environments. Screens will remain useful but will be only one part of a distributed interface ecology that includes sensors, AI agents, and adaptive spaces. The emphasis shifts from devices to presence: how interaction aligns with natural human movement and communication. For creative practice, this unlocks new possibilities. Installations like Random International’s Rain Room (2012), where human movement alters environmental behavior, hint at how embodied interaction can transform cultural experience. Projects such as TeamLab’s Borderless in Tokyo, where digital projections respond to visitor movement, or Meow Wolf’s Convergence Station in Denver, where immersive installations adapt to presence and touch, further illustrate how design is becoming environmental, adaptive, and multisensory.

Design tools that allow manipulation of 3D models in midair or voice-responsive museum guides point toward environments that adapt dynamically to visitors. Done well, these systems reduce friction and make technology feel less like a barrier and more like an extension of human expression.

From Pixels to Presence

Gesture and voice are not simply add-ons to existing systems; they are reconfiguring the grammar of interaction. As design moves beyond the screen, it becomes more embodied, contextual, and multisensory. The challenge is to choreograph these new interactions with cultural sensitivity, accessibility in mind, and awareness of surveillance risks. The future of design will not be measured in pixels alone but in how effectively we shape the invisible interfaces that surround us.