In the subterranean shadows of our ecosystems, slime molds navigate without brains, neurons, or algorithms—yet they solve complex problems, from mazes to nutrient optimization, with uncanny precision. While scientists have studied these decentralized organisms for over a decade, their relevance is taking on new life in the age of AI. Engineers, designers, and researchers are now reexamining these biological models as inspiration for intelligent systems that move beyond silicon and code. From slime molds to bee swarms, the natural world is shaping a new generation of responsive, self-organizing technologies—ones that don’t just process information, but adapt, evolve, and even feel.

This is not your traditional machine learning story. We're not talking about bigger models or better GPUs. We’re talking about systems that pulse, ooze, adapt, and evolve. Responsive AI—built on principles of biomimicry—doesn’t just mimic the natural world’s forms; it borrows its logics and lifeways.

From Muck to Machine

One of the most celebrated non-neural intelligences in nature is Physarum polycephalum, the single-celled yet multinucleate slime mold. Without a brain, this yellow blob can anticipate periodic events, build transport networks that rival engineered ones in efficiency, and even display rudimentary forms of memory. In a 2010 study, researchers in Japan laid out food sources in the pattern of the cities around Tokyo and watched Physarum grow a network that closely resembled the region's rail system, demonstrating its ability to find efficient routes between food sources. It’s no wonder engineers see promise.
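
For readers who want to see the route-finding idea in miniature, here is a rough Python sketch. It assumes a flow-reinforcement rule of the kind used in published mathematical models of Physarum: tubes that carry more flow thicken, and tubes that carry less wither. The two-path setup, the function name `physarum_two_paths`, and every parameter value are illustrative assumptions, not the researchers' actual code.

```python
def physarum_two_paths(len_short=1.0, len_long=2.0, steps=500, dt=0.1):
    """Toy model: two tubes of different length join one food source to another.

    Assumed rule (a simplification for illustration): each tube's thickness
    moves toward the share of flow it currently carries.
    """
    d_short, d_long = 0.5, 0.5  # both tubes start equally thick
    for _ in range(steps):
        # A thicker, shorter tube offers less resistance to flow.
        g_short = d_short / len_short
        g_long = d_long / len_long
        total = g_short + g_long
        # One unit of flow is split in proportion to conductance.
        q_short = g_short / total
        q_long = g_long / total
        # Reinforce tubes that carry flow; let the others decay.
        d_short += dt * (q_short - d_short)
        d_long += dt * (q_long - d_long)
    return d_short, d_long


if __name__ == "__main__":
    short, long_ = physarum_two_paths()
    print(f"short tube thickness: {short:.4f}")
    print(f"long tube thickness:  {long_:.4f}")
```

In this toy setup the shorter tube ends up carrying essentially all the flow while the longer one fades, with no central planner choosing the route.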

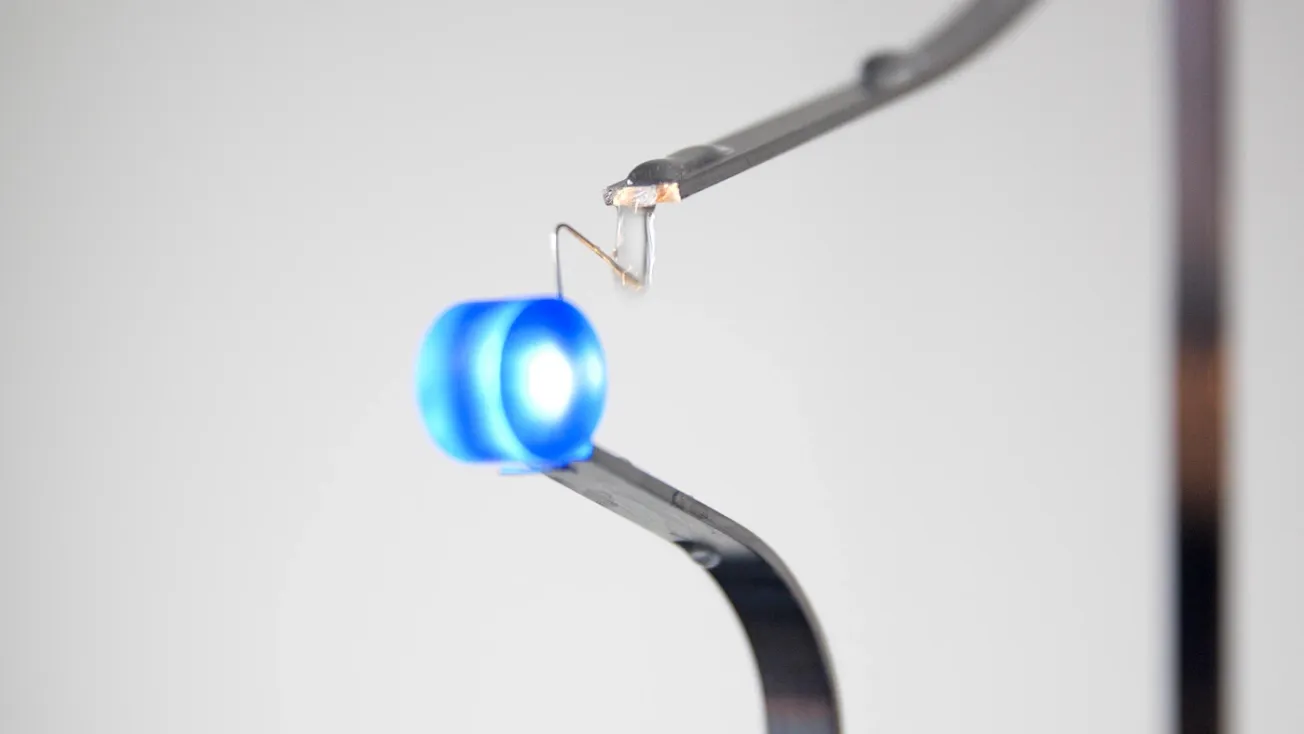

Roboticists at MIT and the University of Bristol are exploring soft robotics based on slime mold movement—shifting the paradigm from rigid code to embodied adaptability. These robots don’t execute preprogrammed tasks; they feel, sense, and flow, responding to environmental feedback in real time.

Thinking Outside the Neural Net

For decades, artificial intelligence has been synonymous with ever-larger models and faster processors. But as AI moves into the physical world—controlling robots, wearables, and interactive spaces—it must become more embodied, responsive, and distributed.

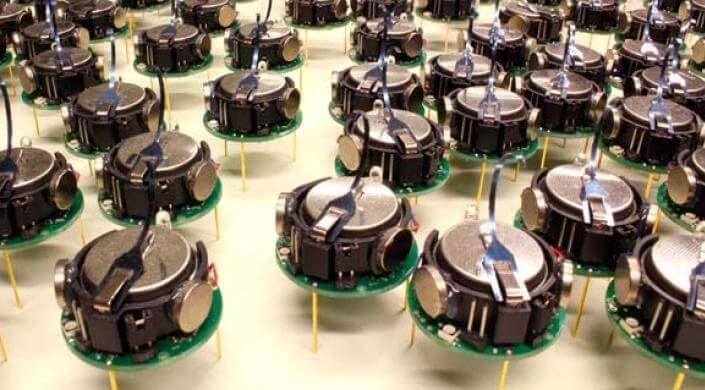

Swarm robotics takes inspiration from bees, ants, and birds. No single bot sees the whole picture, but through local interactions the group achieves complex goals. Do we tend to mistake centralization for intelligence? Perhaps, as we speculate about new architectures of computation, nature can show us that intelligence often arises from the bottom up, out of many small, simple interactions.
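
To make the bottom-up idea concrete, here is a small Python sketch of one classic local rule, neighbor averaging. It assumes a hypothetical ring of bots, each holding a single sensor reading and talking only to the bots on either side. The function name `local_consensus` and the sample readings are made up for illustration; real swarm systems use many different rules.

```python
def local_consensus(values, rounds):
    """Each bot repeatedly replaces its value with the average of its own
    value and its two ring neighbors' values. No bot ever sees the whole group.
    """
    n = len(values)
    state = list(values)
    for _ in range(rounds):
        # Every bot updates at once, using only the previous round's values.
        state = [
            (state[(i - 1) % n] + state[i] + state[(i + 1) % n]) / 3.0
            for i in range(n)
        ]
    return state


if __name__ == "__main__":
    readings = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]  # hypothetical sensor readings
    for value in local_consensus(readings, rounds=100):
        print(round(value, 6))
```

After enough rounds every bot settles on the group-wide average, a global fact that none of them ever measured directly.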

These concepts don’t just reshape machines—they reshape metaphors. Instead of comparing intelligence to a brain, we’re comparing it to a colony, a network, a responsive membrane.

Bio-Hybrid Interfaces

In the hands of artists, the implications go beyond performance and efficiency—they become aesthetic, ethical, and deeply embodied.

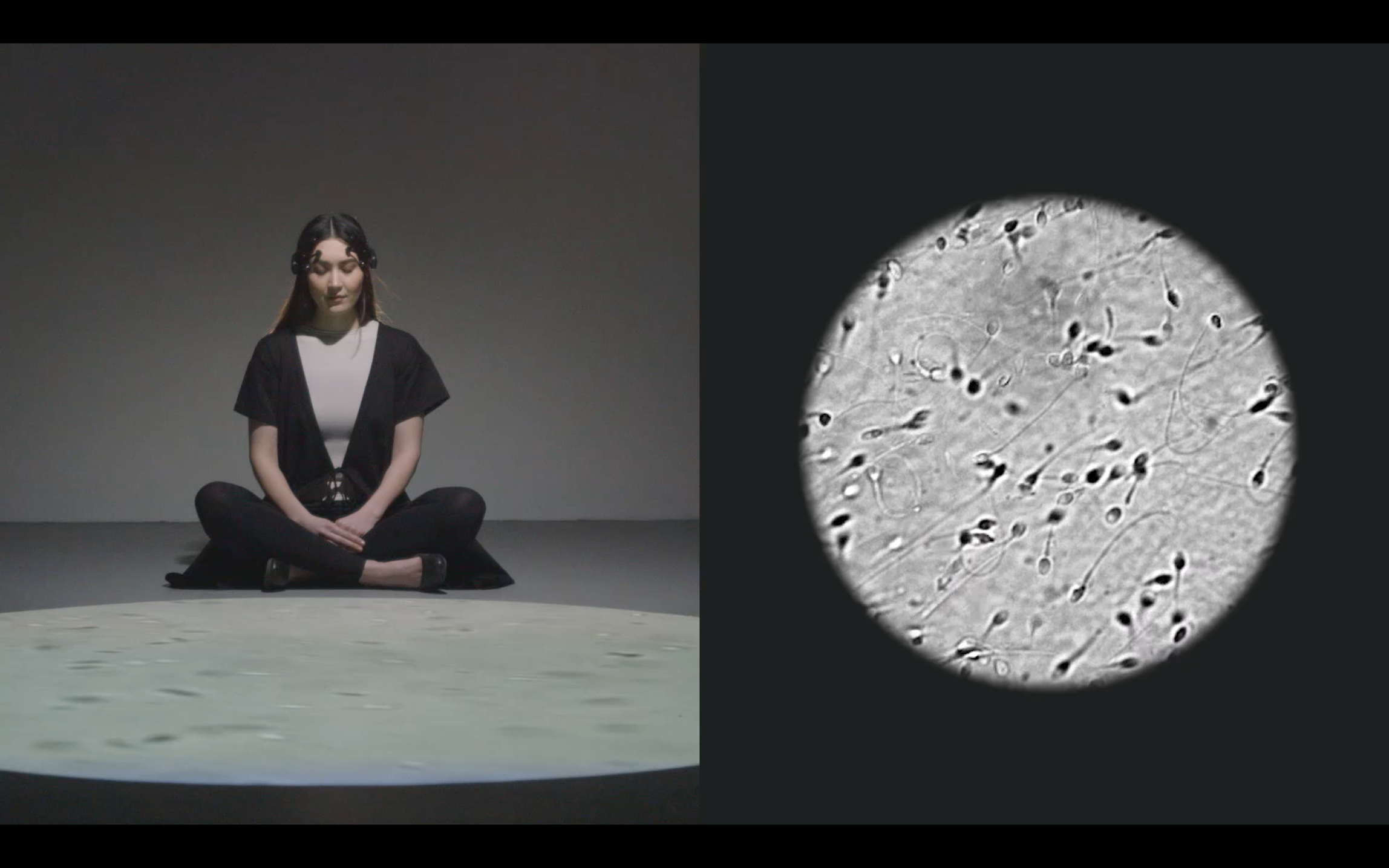

Ani Liu explores the messy overlap between biology, gender, and computation. In her project Mind Controlled Spermatozoa, she merges synthetic biology with interactive design, confronting cultural anxieties around control, reproduction, and machine agency. “I am an artist that practices at the intersection of art and science, and while the research component is very important to me, I think it is the emotional narrative that draws you in,” she says. Her installations invite viewers to experience interfaces that bleed, sweat, or grow—emphasizing affect over efficiency.

Špela Petrič, a trained biologist turned artist, pushes that premise further. Her work often involves direct interaction with nonhuman organisms—plants, microbes, slime molds. In PL’AI, she connects plants to robotic systems that “play” with them, establishing a feedback loop where plant behavior guides machine movement. Petrič treats biological systems not as metaphors but as collaborative intelligences, challenging anthropocentric views of technology.

Machines That Learn by Feeling

More than ever, artists are using bioinspired AI to explore identity, surveillance, and power.

Heather Dewey-Hagborg, known for Stranger Visions, in which she used DNA traces to reconstruct speculative faces, now investigates the eerie overlap of AI, facial recognition, and genetic privacy. In works like Probably Chelsea, she uses computational processes to generate dozens of potential faces from a single genome, revealing the algorithmic biases in how we define identity. If there is no objective face, is it only interpretation, and is that interpretation always political?

Her projects underscore a key theme in responsive AI: subjectivity. These systems aren’t neutral. They reflect the biases of their training data, the assumptions of their designers, and the environments they operate in.

Alexandra Daisy Ginsberg, meanwhile, explores what it means to simulate lost ecosystems. In The Substitute, she uses AI to recreate the functionally extinct northern white rhino, challenging audiences to reflect on technological resurrection and artificial memory. Her work questions whether machines can truly restore what we've erased, or whether they simply aestheticize our guilt in higher resolution.

The Creative Opportunity

For future-facing creatives, the emergence of responsive, biomimetic AI isn’t just a technological shift—it’s a design invitation. It demands new aesthetics, ethics, and materials.

Here are three entry points for creative practitioners:

- Start with Material Curiosity. Explore biodesign platforms like OpenWetWare or Grow.bio for sustainable, responsive materials that interface with the environment.

- Think in Systems, Not Objects. Draw from fields like cybernetics, permaculture, or ecological design to frame projects as dynamic processes, not static artifacts.

- Collaborate Across Kingdoms. Join forces with mycologists, chemists, or roboticists. The future is fungal, fluid, and deeply collaborative.

Rethinking Intelligence

At its core, biomimetic AI invites us to ask: What if intelligence isn’t above us, but all around us? What if thinking doesn’t always mean predicting—but sensing and responding?

As Dewey-Hagborg notes, “The more we embed intelligence into our world, the more we must consider what kind of world we are embedding it into.”

In other words, the future of AI might not look like us—it might resemble a colony of ants, a puff of spores, or a thinking puddle of slime.