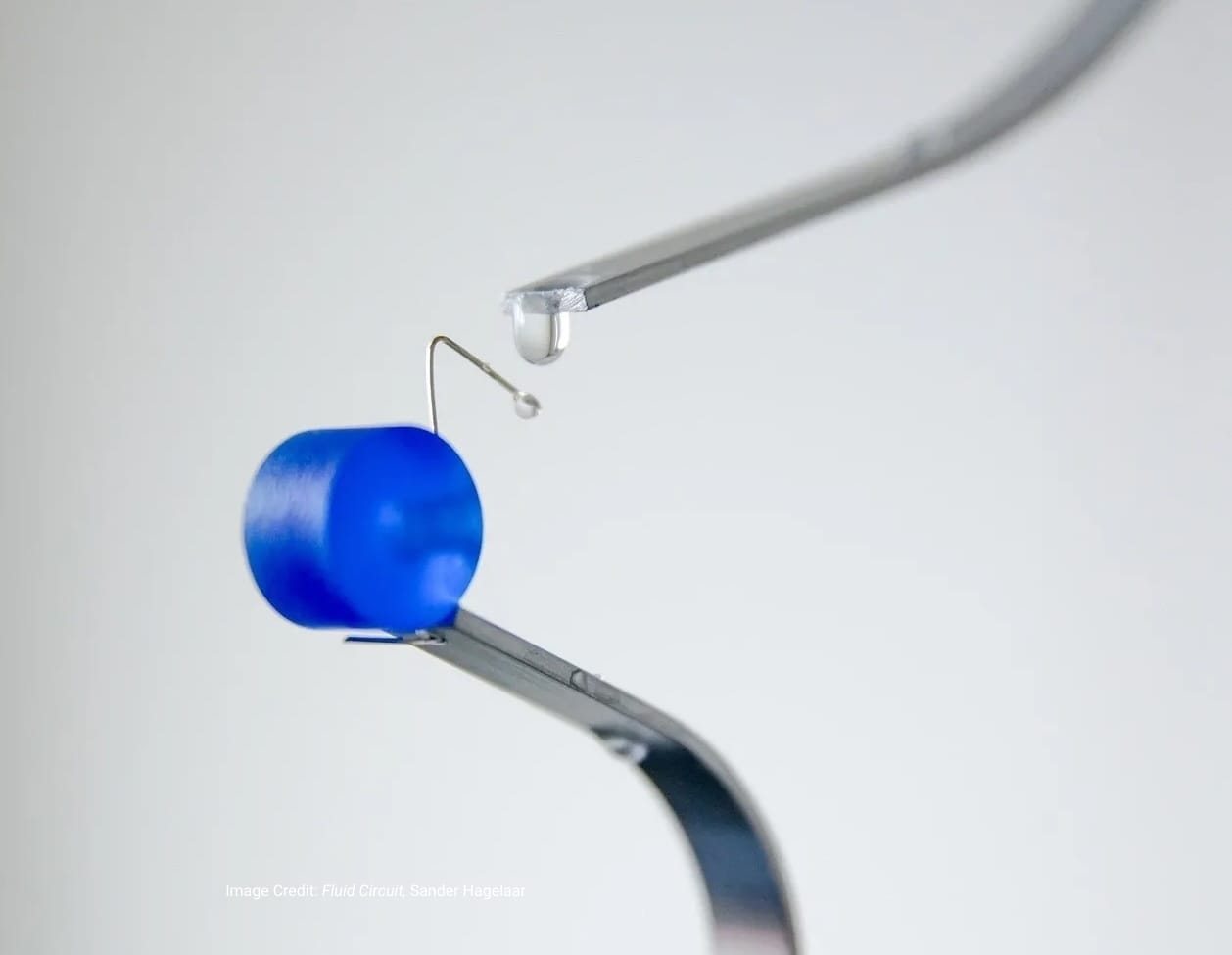

Industrial designer Ross Lovegrove has teamed with Google DeepMind to chart new territory where generative AI meets industrial prototyping. According to a blog post from the research lab, the collaboration involved fine-tuning a text-to-image model (based on Imagen) on a curated dataset of Lovegrove’s sketches and design lexicon, enabling the system to internalise his signature biomorphic forms.

The project chose a chair as the testbed: a conventional object with a defined function but open formal possibilities. Lovegrove’s studio, working with DeepMind engineers, developed a design vocabulary and deliberately avoided the word “chair” in prompts to coax novel outputs. From concept to physical object, the workflow moved from AI generation, through material and viewpoint exploration using Google’s Gemini tools, to metal 3D printing.
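
The prompt constraint described above can be illustrated with a minimal sketch. It is not the studio's or DeepMind's tooling: the function names, the banned-term list, and the example descriptors are all hypothetical, and the only detail taken from the account is that prompts avoided the word “chair”.

```python
import re

# Hypothetical banned list: the account only mentions avoiding "chair".
BANNED_TERMS = {"chair"}


def find_banned_terms(prompt, banned=BANNED_TERMS):
    """Return the banned terms that appear as whole words (singular or plural)."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return sorted(t for t in banned if t in words or t + "s" in words)


def build_prompt(descriptors, banned=BANNED_TERMS):
    """Join design-vocabulary descriptors into a prompt, rejecting banned terms."""
    prompt = ", ".join(descriptors)
    hits = find_banned_terms(prompt, banned)
    if hits:
        raise ValueError(f"prompt names the object directly: {hits}")
    return prompt


# Illustrative descriptors only; they are not drawn from Lovegrove's lexicon.
print(build_prompt(["biomorphic lattice", "fluid cast metal"]))
```

A check like this keeps the object's name out of the prompt so that the model has to be steered by formal descriptors rather than by the category label.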

The outcome is a tangible prototype that embodies Lovegrove’s aesthetic yet extends it through human-machine co-creation. The case points to a shift in industrial-design workflows in which AI does not replace the designer but augments the designer’s generative capacity. As Lovegrove puts it, “For me, the final result transcends the whole debate on design. It shows us that AI can bring something unique and extraordinary to the process.”

For design professionals, this signals that prompt crafting, semantic modelling of a design language, and fluency with physical-manufacturing pipelines are becoming core skills. In operational terms, the experiment maps a workflow (dataset → fine-tuned model → prompt-driven generation → CAD refinement → additive fabrication) that could serve as a template for future studio-AI collaborations.