For decades, the Turing test has stood as the benchmark for evaluating a computer's ability to exhibit human-like intelligence. The concept of a machine passing this test without possessing artificial general intelligence (AGI) seemed distant, until the advent of large language models (LLMs) such as GPT and Bard. These models have raised questions about the relevance of the Turing test and the ethical implications of creating intelligent machines that blur the lines between human and machine.

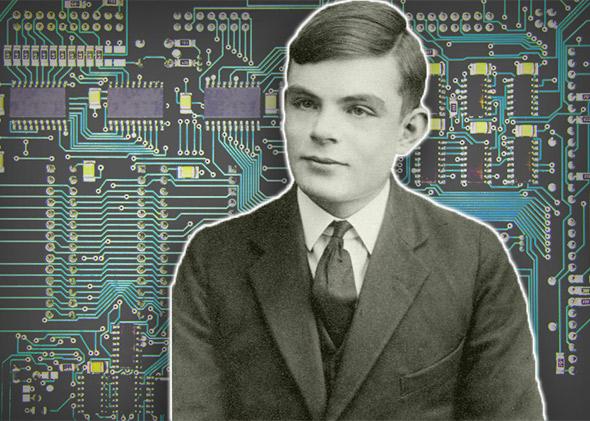

The Turing test, introduced by Alan Turing in his 1950 paper "Computing Machinery and Intelligence" as the "imitation game," proposed a deceptively simple yet profoundly challenging criterion: if a machine can hold a conversation that a human judge cannot reliably distinguish from a human's, it can be considered intelligent. Although the test has long served as the gold standard for assessing a machine's conversational abilities, it raises several important questions in the modern context.

Artificial intelligence has come a long way since Turing's time. Machine learning, deep learning, and natural language processing have advanced to the point where AI-driven chatbots and virtual assistants can hold convincing conversations. The question is therefore no longer whether AI can mimic human conversation, but what AI should and should not do.

One of the most pressing concerns surrounding AI is its ethical application. AI can be harnessed for good or ill, from improving healthcare and solving complex problems to perpetrating cybercrime and manipulating public opinion. What we need now is a benchmark that measures not how well a machine mimics a human, but how reliably it respects ethical boundaries.

An AI Turing test could serve as a threshold to ensure that AI systems operate within ethical parameters. It would test not just linguistic ability but also a system's understanding of ethics and its commitment to avoiding harm. This is particularly relevant as AI is increasingly integrated into fields such as autonomous vehicles, healthcare, and finance, where ethical decision-making is paramount.

Artificial general intelligence (AGI), often portrayed in science fiction as machines with human-like reasoning and decision-making abilities, remains a distant goal. Current AI systems are specialized for particular tasks and lack the broad generalization and reasoning capabilities of humans. An AI Turing test must take this limitation into account.

The question, then, is whether we should judge AI by human standards or create new metrics tailored to its capabilities and limitations. After all, AI excels at data processing, pattern recognition, and repetitive tasks, but falls short in empathy and complex ethical reasoning.

Bias in AI systems has been a prominent concern. AI algorithms can inherit the biases present in the data they are trained on, leading to discriminatory outcomes. A robust AI Turing test should evaluate a system's ability to recognize and correct such biases, promoting fairness and equity. Such a test could also gauge AI's ability to adapt to evolving ethical norms, making it an active force in upholding them rather than a passive system that merely follows predefined rules.

As AI becomes increasingly integrated into our lives, trust becomes a critical factor. Beyond evaluating conversational ability, an AI Turing test could assess transparency. AI systems should be able to explain their decisions, particularly when lives, finances, or other high-stakes outcomes depend on them. Building trust in AI means enabling users to understand how and why AI systems reach the decisions they do. Transparency can help dispel the mystique surrounding AI and foster a deeper connection between humans and machines.

While an AI Turing test can be a valuable metric for evaluating AI's conversational and ethical abilities, it is not a panacea. It may fail to account for AI's particular limitations and peculiarities: AI processes information in its own ways, and conversation is only one facet of its capabilities. We must also avoid the trap of anthropomorphizing AI. AI is not human, and it should not strive to be. The focus should be on AI being the best version of itself, complementing human abilities rather than replicating them.

The notion of an AI Turing test is a pertinent one as we confront the profound ethical and practical challenges posed by AI. It underscores the need for AI not just to mimic human conversation but to understand and respect human values and ethics. As AI algorithms and their applications develop further, the conversation should shift from merely measuring AI's human likeness to evaluating its impact on society, its ethical decision-making, and its transparency and adaptability. An AI Turing test redefined for the 21st century could serve as a vital tool in shaping AI's future, ensuring that AI aligns with human interests and values while respecting its unique potential.