Cultural circulation online is often framed as frictionless distribution: publish, post, upload, and be consumed. In practice, visibility is governed by ranking systems, recommendation engines, and moderation pipelines that sit between creators and publics. Platforms such as TikTok, Instagram, and YouTube operate less like neutral conduits and more like infrastructural curators, determining what surfaces, what trends, and what disappears.

Algorithmic visibility is not simply a technical feature. It is a system of cultural governance embedded in code, policy, and economic incentives. Understanding who controls cultural circulation online requires examining how these systems rank, filter, monetize, and increasingly generate content.

From Chronological Feeds to Algorithmic Governance

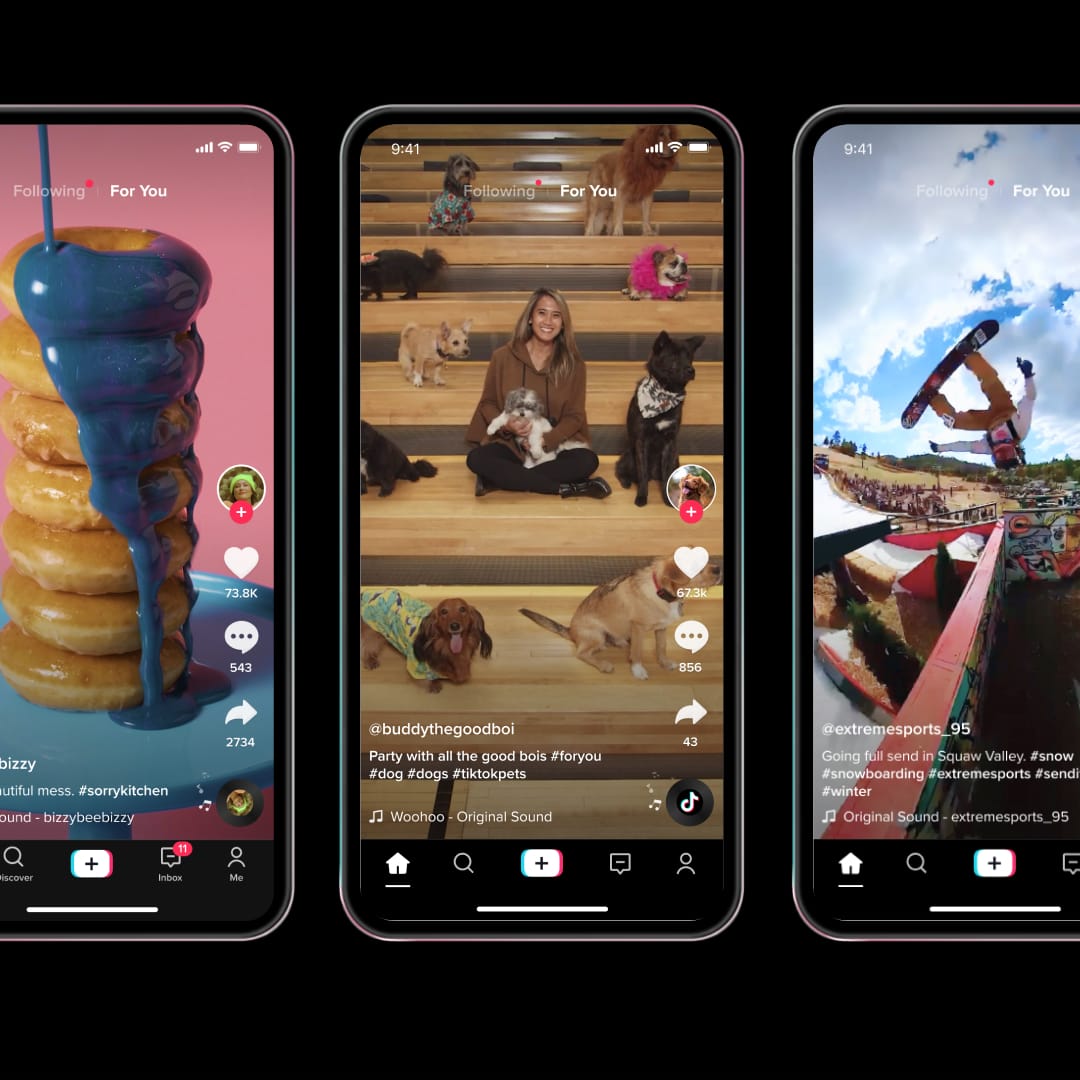

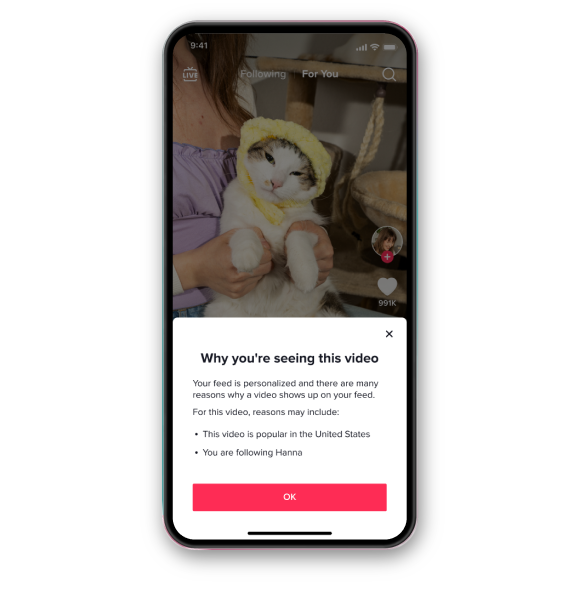

Many major social platforms originally defaulted to reverse-chronological feeds but shifted over time toward recommendation-driven ranking optimized for engagement signals. YouTube has described its recommendation system as using signals including clicks, watch time, and measures of viewer satisfaction derived from surveys. A widely cited figure, based on public remarks by YouTube’s leadership at industry events, attributes around 70% of watch time to recommendations. TikTok’s “For You” feed similarly ranks videos using factors such as user interactions, strong interest signals like watch completion, video information (captions, sounds, hashtags), and some device and account settings. Instagram, once anchored in a follower-based chronological feed, shifted to algorithmic ranking and has been described (including by platform explainers and reporting around the 2016 change) as prioritizing predicted interest, relationship, and timeliness when ordering content. These systems do not merely sort content; they shape the conditions under which posts become discoverable.
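The difference between the two ordering regimes can be made concrete with a minimal sketch. It is not any platform's actual ranking code: the `Post` fields, the `WEIGHTS` values, and the 24-hour half-life are all hypothetical, chosen only to illustrate how a weighted blend of predicted interest, relationship, and timeliness can reorder a feed that a chronological sort would present newest-first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    post_id: str
    age_hours: float           # time elapsed since posting
    predicted_interest: float  # hypothetical model output in [0, 1]
    relationship: float        # hypothetical closeness to the author in [0, 1]


# Illustrative weights only; real platforms do not publish theirs.
WEIGHTS = {"interest": 0.6, "relationship": 0.3, "timeliness": 0.1}


def timeliness(post: Post, half_life_hours: float = 24.0) -> float:
    """Decay from 1.0 toward 0.0, halving every `half_life_hours`."""
    return 0.5 ** (post.age_hours / half_life_hours)


def score(post: Post) -> float:
    """Weighted blend of the three signals named in the text."""
    return (
        WEIGHTS["interest"] * post.predicted_interest
        + WEIGHTS["relationship"] * post.relationship
        + WEIGHTS["timeliness"] * timeliness(post)
    )


def chronological_feed(posts: list[Post]) -> list[Post]:
    """Reverse-chronological order: the newest post comes first."""
    return sorted(posts, key=lambda p: p.age_hours)


def ranked_feed(posts: list[Post]) -> list[Post]:
    """Score-based order: the highest blended score comes first."""
    return sorted(posts, key=score, reverse=True)


posts = [
    Post("a", age_hours=1.0, predicted_interest=0.2, relationship=0.1),
    Post("b", age_hours=12.0, predicted_interest=0.9, relationship=0.8),
    Post("c", age_hours=48.0, predicted_interest=0.6, relationship=0.2),
]

print([p.post_id for p in chronological_feed(posts)])
print([p.post_id for p in ranked_feed(posts)])
```

With these invented inputs, the newest post leads the chronological feed but drops to last place once predicted interest and relationship dominate the score. Which signals receive weight is a design decision, and that decision is where the governance lies.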

Optimization as Aesthetic Constraint

Because recommendation systems prioritize metrics such as watch time, retention, and engagement, creators often adapt form and pacing to align with those signals. YouTube has publicly identified watch time and viewer satisfaction as central to its recommendation system. TikTok’s For You feed emphasizes watch completion and interaction signals, while Instagram has described how Reels and other recommendation surfaces rank content based on predicted interest and engagement.

Creator guidance materials frequently emphasize click-through rates, retention curves, and early engagement signals, encouraging thumbnails, titles, and opening seconds that maximize viewer response. When ranking systems reward frequent posting, high retention, and specific formats, creators adjust accordingly. Researchers and commentators have observed that this dynamic can contribute to format convergence over time, as visibility becomes tied to measurable engagement patterns.

Monetization systems reinforce these incentives. YouTube’s Partner Program requires minimum subscriber and watch-hour thresholds before revenue sharing is enabled, creating economic gates that influence growth strategies. Visibility and monetization are structurally intertwined: ranking affects revenue potential, and revenue potential shapes production decisions.

The Politics of Amplification and Omission

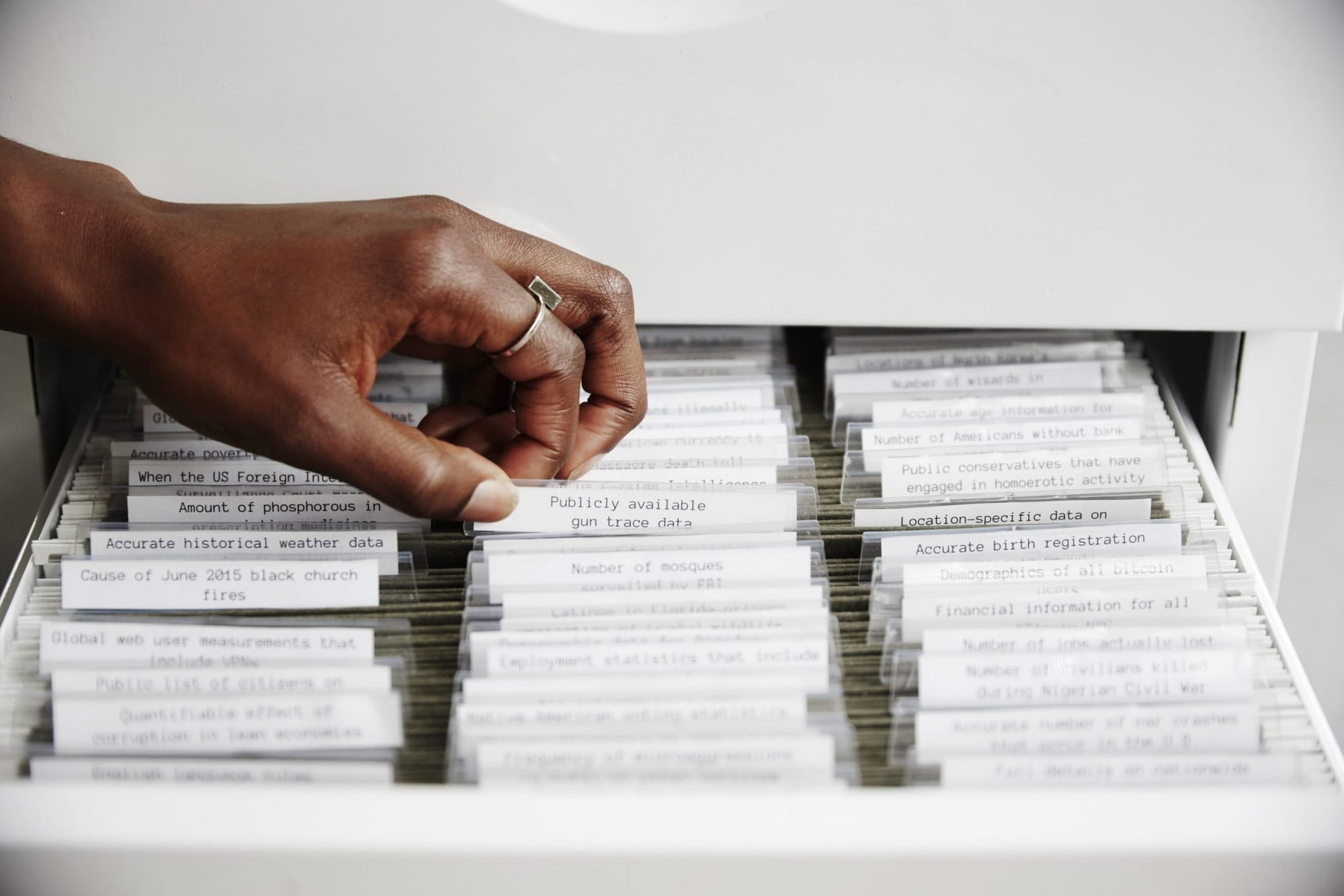

Algorithms amplify some material while marginalizing other forms. This dynamic extends beyond virality to include classification, moderation, and training data. Artist and researcher Mimi Ọnụọha has examined how datasets reflect structural absence, arguing that what is missing can be as consequential as what is included. In the context of platform algorithms, omissions may stem from biased training data, automated moderation errors, or ranking systems whose optimization criteria deprioritize certain types of content.

Platforms deploy automated moderation tools to detect hate speech, misinformation, and other policy violations, and publish transparency reports outlining enforcement metrics. However, the specific functioning of these models remains largely proprietary. Independent research has documented false positives and uneven enforcement, including cases in which language associated with marginalized communities is misclassified by automated systems.

Amplification also intersects with generative AI systems. Large language models and image generators are trained on vast corpora of publicly available data. Because widely circulated and highly indexed content is more likely to be scraped into training datasets, recommendation systems can indirectly influence the composition of future training data. This creates a recursive dynamic in which ranking systems shape what becomes broadly available cultural material, which in turn informs subsequent generative outputs. Such feedback loops can consolidate influence within infrastructures that are neither neutral nor evenly distributed.
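The recursive dynamic described above can be illustrated with a toy model. It is a sketch under stated assumptions, not an empirical claim: `simulate_feedback`, the `boost` exponent, and the starting shares are all hypothetical. Each item holds a share of overall visibility, and each round stands in for one cycle in which already-visible material is favored again, whether by a ranking system or by a scraper that collects what is most widely indexed.

```python
def simulate_feedback(shares: list[float], rounds: int, boost: float = 1.5) -> list[list[float]]:
    """Return the visibility shares after each round, starting with the input.

    Each round raises every share to the power `boost` and renormalizes so the
    shares sum to 1. A boost above 1 is an assumption that models a system
    favoring items that are already more visible.
    """
    history = [list(shares)]
    for _ in range(rounds):
        weighted = [s ** boost for s in shares]
        total = sum(weighted)
        shares = [w / total for w in weighted]
        history.append(shares)
    return history


def top_share(shares: list[float]) -> float:
    """Share of visibility held by the single most visible item."""
    return max(shares)


# Hypothetical starting distribution across four items.
initial = [0.4, 0.3, 0.2, 0.1]
history = simulate_feedback(initial, rounds=5)

for round_number, shares in enumerate(history):
    print(round_number, round(top_share(shares), 3))
```

Under these assumptions the leading item's share grows every round while the others shrink, which is the consolidation the paragraph describes. Setting `boost` to 1.0 leaves the distribution unchanged, so the concentration comes entirely from the assumed preference for what is already visible.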

Artists and Designers Working on the System

Some practitioners have treated algorithmic systems themselves as material. Hito Steyerl has examined how images circulate through compression, duplication, and digital distribution networks, tracing the relationship between visibility and power. Her essay “In Defense of the Poor Image” analyzed how distribution conditions shape meaning and authority. James Bridle has investigated opaque technological systems, including automated decision-making and machine vision, emphasizing the difficulty of understanding infrastructures that shape public life. Through research and artistic intervention, Bridle’s work renders such systems visible and frames algorithms as active participants in cultural production rather than neutral utilities.

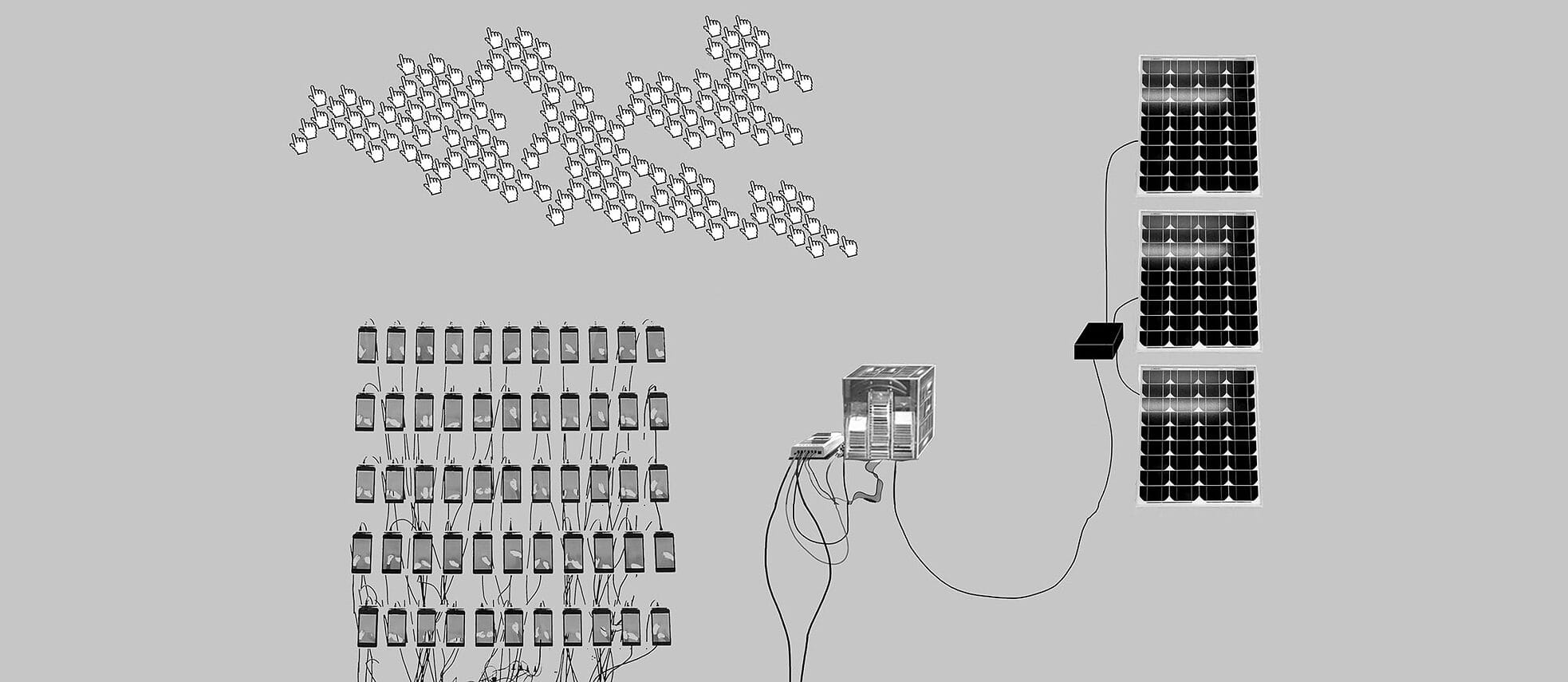

Artists including Tega Brain and Sam Lavigne have engaged directly with APIs, data scraping, and automated platforms, exposing how networked systems sort, classify, and monetize attention. Their projects treat code, metadata, and platform rules as sites of intervention, demonstrating that circulation itself is programmable. These practices do not dismantle platform power, but they surface its mechanics. By foregrounding the infrastructures behind recommendation engines, moderation systems, and data economies, they highlight the importance of understanding how algorithmic systems shape contemporary cultural circulation.

Who Controls Cultural Circulation?

Control over cultural circulation online is shaped by multiple actors, yet concentrated within a small number of dominant platforms. Platform companies design and continually adjust ranking systems. Advertiser requirements influence monetization policies and brand-safety standards. Machine learning engineers tune models using engagement and safety metrics. Users generate the behavioral data that enables personalization. Generative AI systems are trained on large corpora of publicly available content that reflect existing patterns of visibility and circulation.

The result is a multi-layered governance structure in which visibility emerges from interactions between code, policy, and market incentives. Cultural production is shaped at multiple points by recommendation systems and monetization thresholds. Algorithmic visibility has become a structural feature of contemporary networked platforms. As recommendation engines, automated moderation systems, and generative models become more deeply integrated, the central question is not simply whether algorithms influence circulation, but how that influence is designed, audited, and contested.