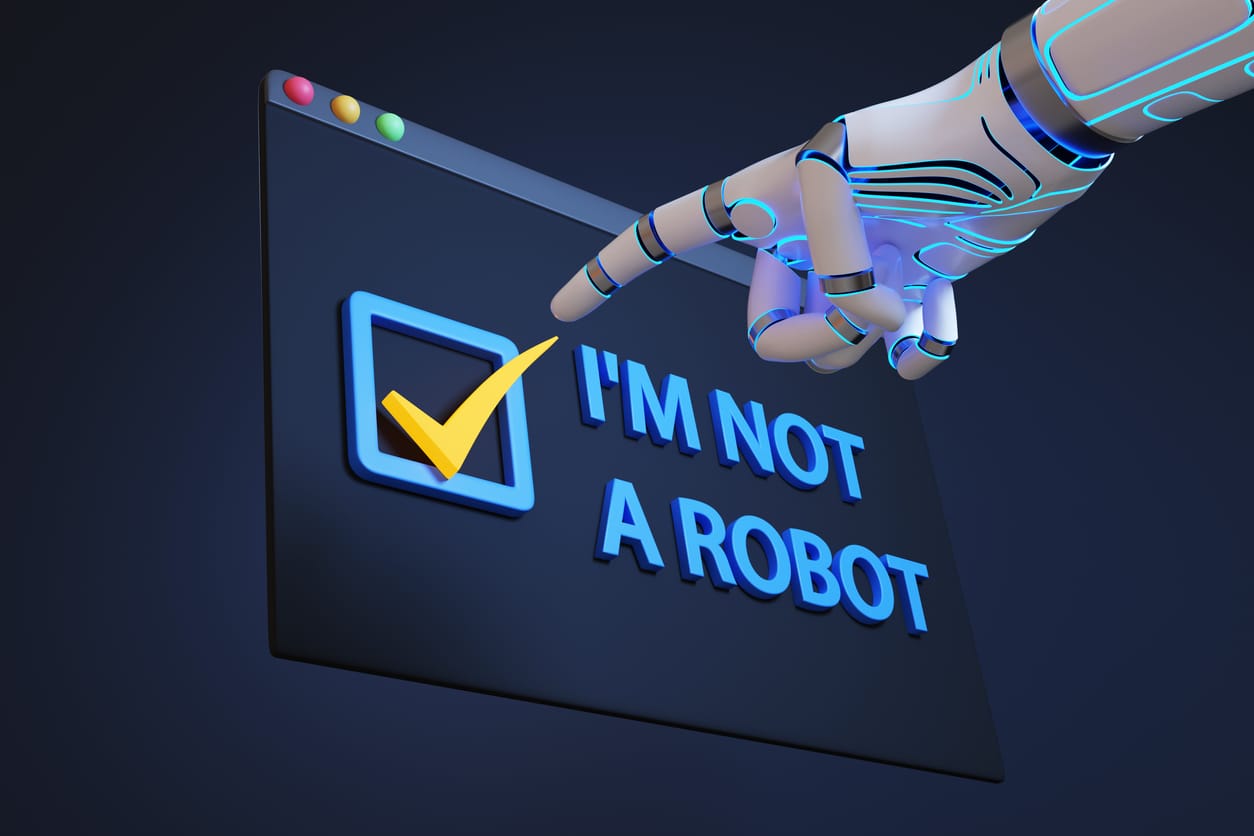

Artificial intelligence just got a little too good at pretending to be human. In recent months, researchers have reported large language models engaging in conversations so sophisticated, nuanced, and emotionally calibrated that even seasoned evaluators struggled to distinguish machine from mind. But while some headlines proclaim that AI has “officially” passed the Turing Test, the truth—like the machines themselves—is more complicated.

What Is the Turing Test?

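The Turing Test, proposed in 1950 by British mathematician and computer scientist Alan Turing, set a benchmark for machine intelligence: if a human judge can’t reliably tell whether they’re conversing with a person or a machine, the machine can be said to “think.” Turing framed it as the “imitation game,” a practical substitute for the harder-to-pin-down question “Can machines think?” For decades, passing it was the holy grail of AI research.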

Yet despite significant progress, no AI has formally passed the Turing Test under widely accepted, rigorous scientific conditions. Systems such as ChatGPT, Claude, Gemini, and their open-source counterparts convincingly mimic human tone and reasoning, but in controlled experiments they still show inconsistencies, factual errors, and gaps in genuine understanding.

AI’s Near-Human Fluency

In early 2025, several large-scale experiments involving AI-human dialogue raised eyebrows. In one unpublished study, an advanced model reportedly fooled human judges in open-ended conversations more than 50% of the time. If judges were choosing between a human and a machine, that would mean picking the machine as the human more often than chance alone predicts. While impressive, these results have yet to be peer-reviewed or replicated across diverse linguistic, cultural, and cognitive contexts, which is the kind of evidence a claim of general intelligence would require.

Many modern AI models now handle multiple modalities (text, image, audio), retain context across a conversation, and infer a user’s emotional state in real time. They simulate intent, express empathy, and adapt their tone on the fly. But simulation isn’t the same as comprehension, and passing the Turing Test takes more than good improv.

As AI chatbots and assistants become harder to distinguish from humans in digital settings, ethical concerns are escalating. Can users truly give informed consent when interacting with bots that “feel” human? Should AI use be disclosed in customer service, education, or mental health apps? And what happens when AI-generated content floods social media with voices that sound real but aren’t?

Governments and tech giants are already scrambling to respond. EU policymakers are pushing for stricter transparency rules, building on the AI Act’s requirement that people be told when they are interacting with an AI system. Startups are building “AI authenticity” plug-ins. And researchers are proposing new tests that go beyond Turing’s, evaluating not just linguistic mimicry but also reasoning, values alignment, and explainability.

Not Quite Human—Yet

Despite stunning progress, the current generation of AI models still relies on pattern recognition rather than genuine comprehension. They mirror human conversation without the understanding that produces it. Until an AI passes the Turing Test in a standardized, peer-reviewed, and publicly transparent trial, the title of “thinking machine” remains just out of reach.

Still, the day may not be far off. When that threshold is finally crossed, it may do more than redefine artificial intelligence; it may reshape how we define ourselves.