Meta and YouTube recently introduced policies requiring content creators to disclose when realistic-looking content has been generated by AI. The move responds to widespread global concern that AI-generated content and deepfakes could pose a substantial risk during upcoming elections. The aim is to promote transparency and accountability among content creators and to help viewers judge the authenticity of the content they consume. However, the effectiveness of these new tools and policies has yet to be determined. Their broader implications for creatives may prove challenging, but they also open the door to exploring critical questions in creative technology.

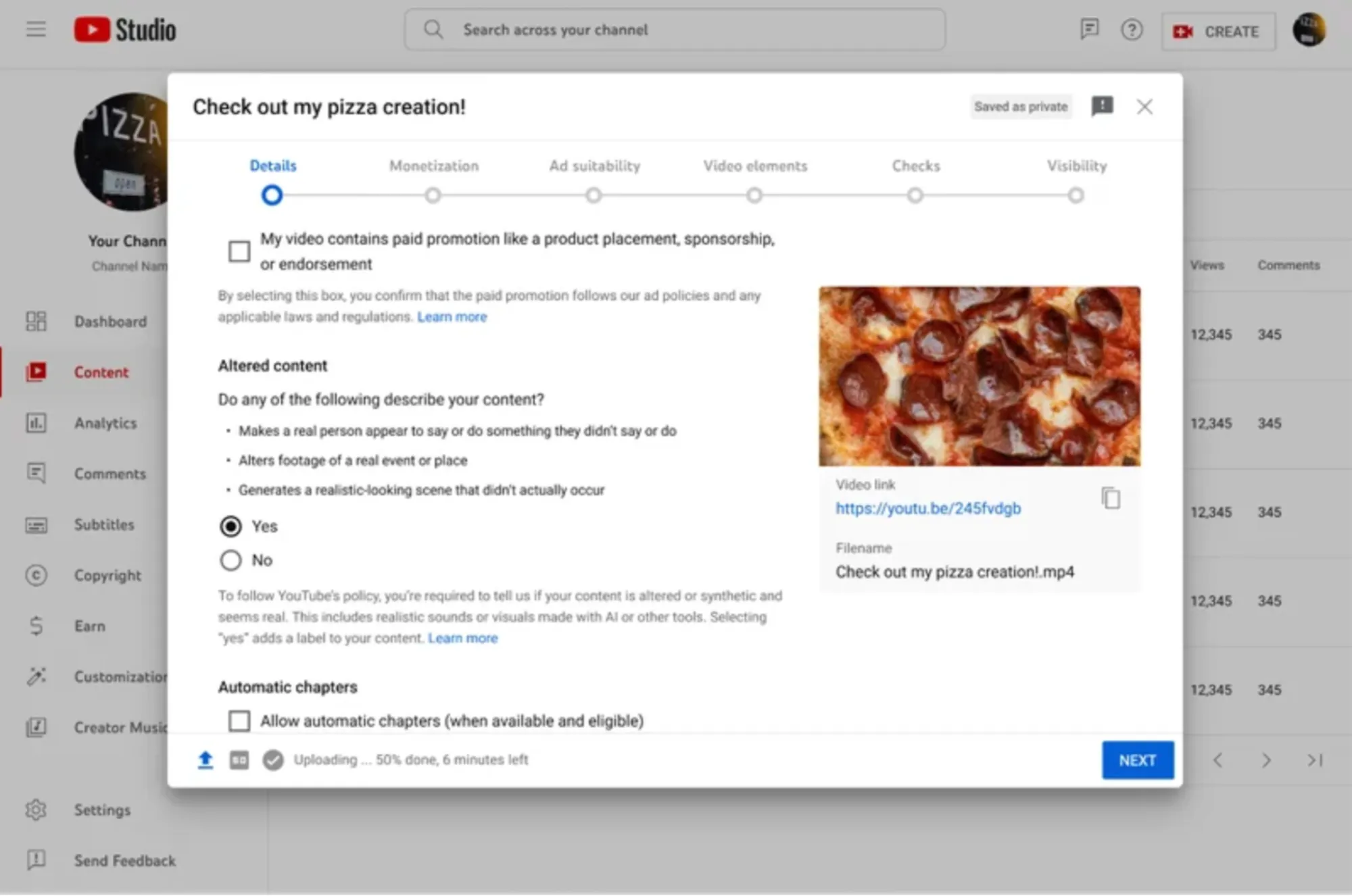

YouTube has introduced a tool in Creator Studio that requires creators to disclose altered or synthetic media. The move is part of its broader effort to combat misinformation and deepfakes on the platform. Creators must disclose when content that viewers could mistake for a real person, place, or event was in fact made with generative AI or other forms of manipulation. The aim is to help viewers distinguish what is real from what is fake, but the rapid influx of new generative AI tools may make this increasingly difficult.

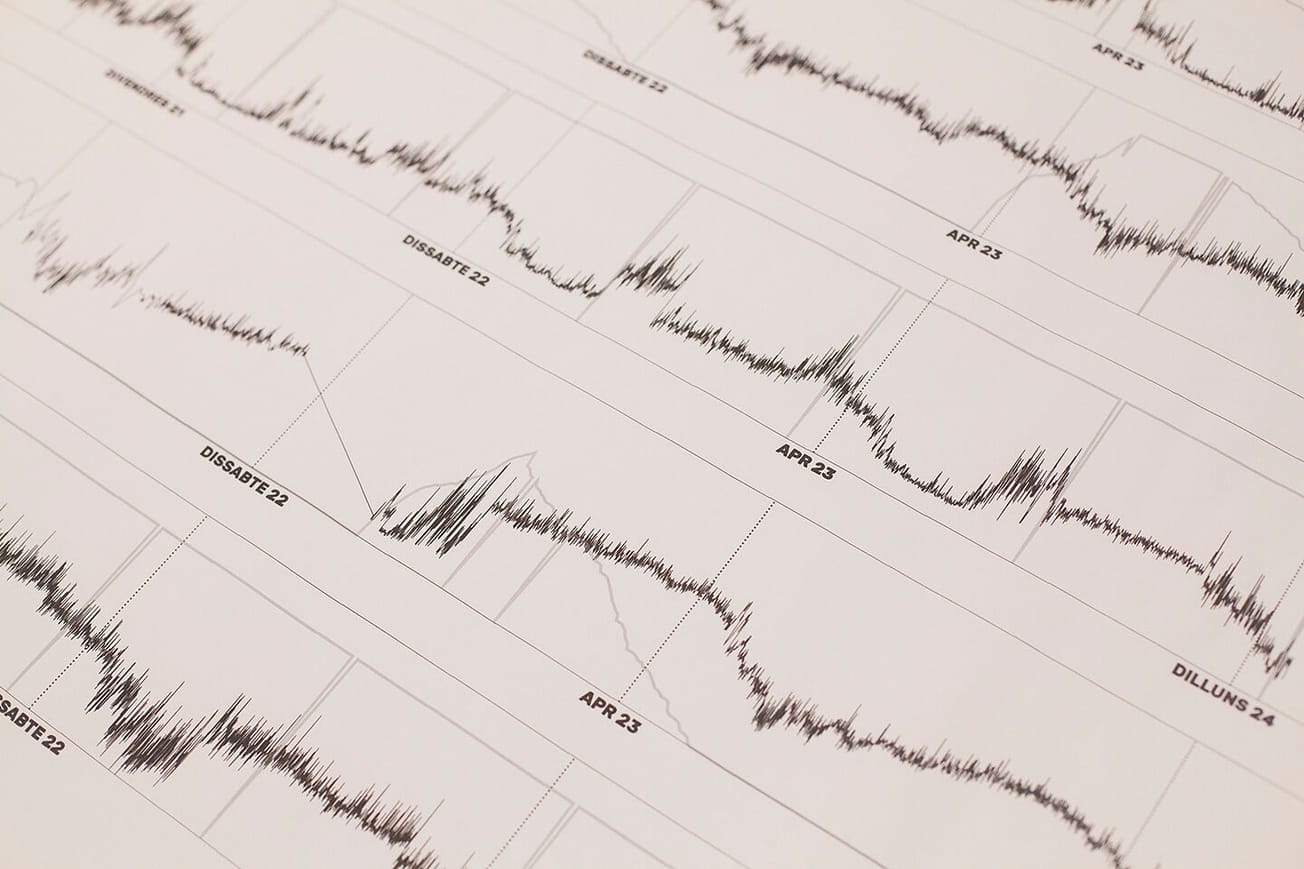

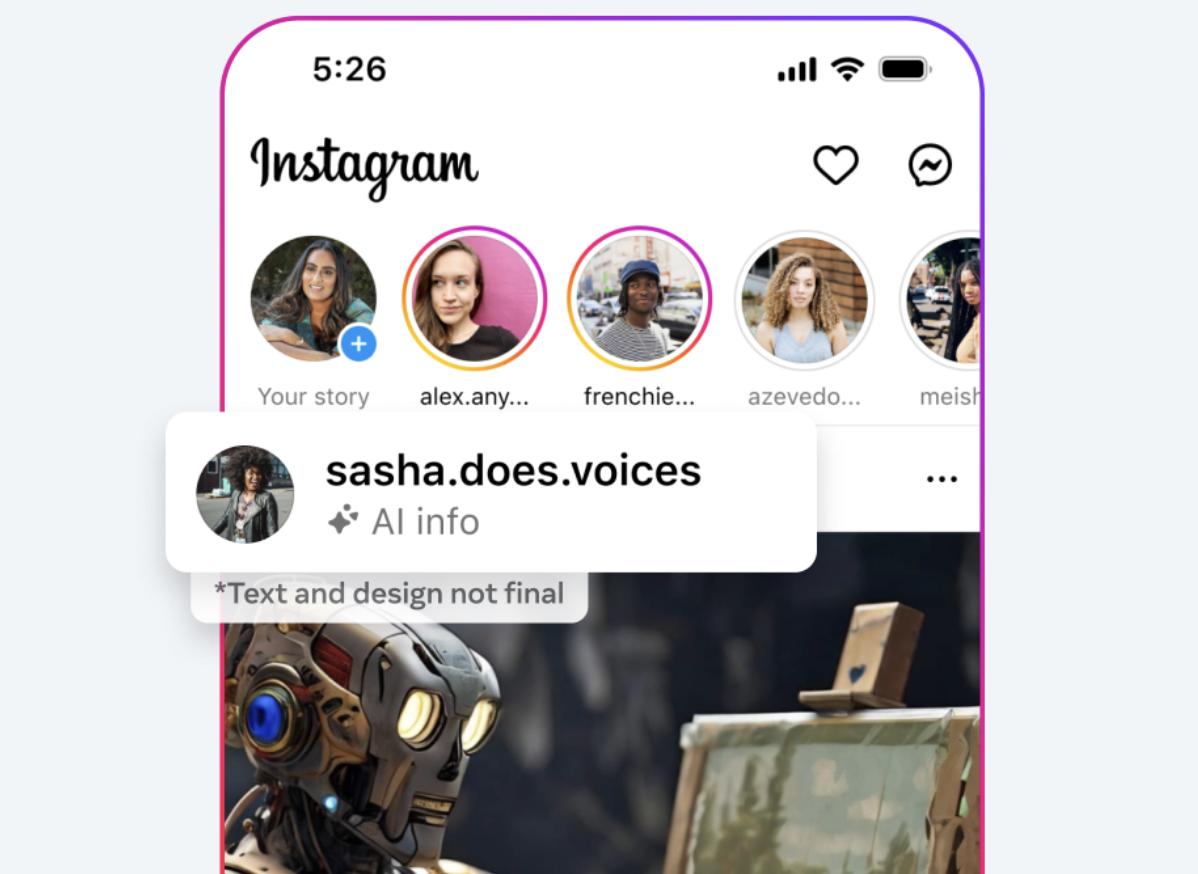

Meta is taking significant steps of its own, citing as a catalyst the upcoming elections in more than 50 countries, home to roughly half the world's population. Nick Clegg, Meta's president of global affairs, has stated that the company is working with industry partners to set common standards. These standards, developed through forums such as the Partnership on AI (PAI), aim to make AI-generated content detectable primarily through "invisible markers," "invisible watermarks," and embedded metadata. Clegg acknowledges the difficulty of ensuring that companies and content creators adhere to PAI best practices, stating, "What we're setting out today are the steps we think are appropriate for content shared on our platforms right now. But we'll continue to watch and learn, and we'll keep our approach under review as we do."

Social media is a core component of our globally networked society and of the communications it enables. The new policies from YouTube and Meta may be a critical step toward addressing misinformation and disinformation spread by content creators on social media platforms. But what are the implications for artists and designers working in the emerging field of generative AI? For them, these policies could strike at the core of questions about truth and authenticity, which may, in fact, open new avenues for critical and creative inquiry.