Last week, I watched my friend stare at her phone, bewildered. “How did TikTok know I needed to hear that?” she asked. It was a video of a stranger articulating a personal crisis she hadn’t spoken aloud—not even to herself. The algorithm had surfaced it anyway. “It’s like it knows me better than I do,” she laughed. Then she watched it again.

Algorithms were once framed as helpful assistants—curating our feeds, surfacing relevant content, and giving us more of what we liked. But as these systems have evolved, so has their influence. We now live in a world shaped less by our choices and more by what predictive engines decide we’re likely to click, swipe, or binge. We are no longer just browsing the feed—we’re becoming what the feed wants.

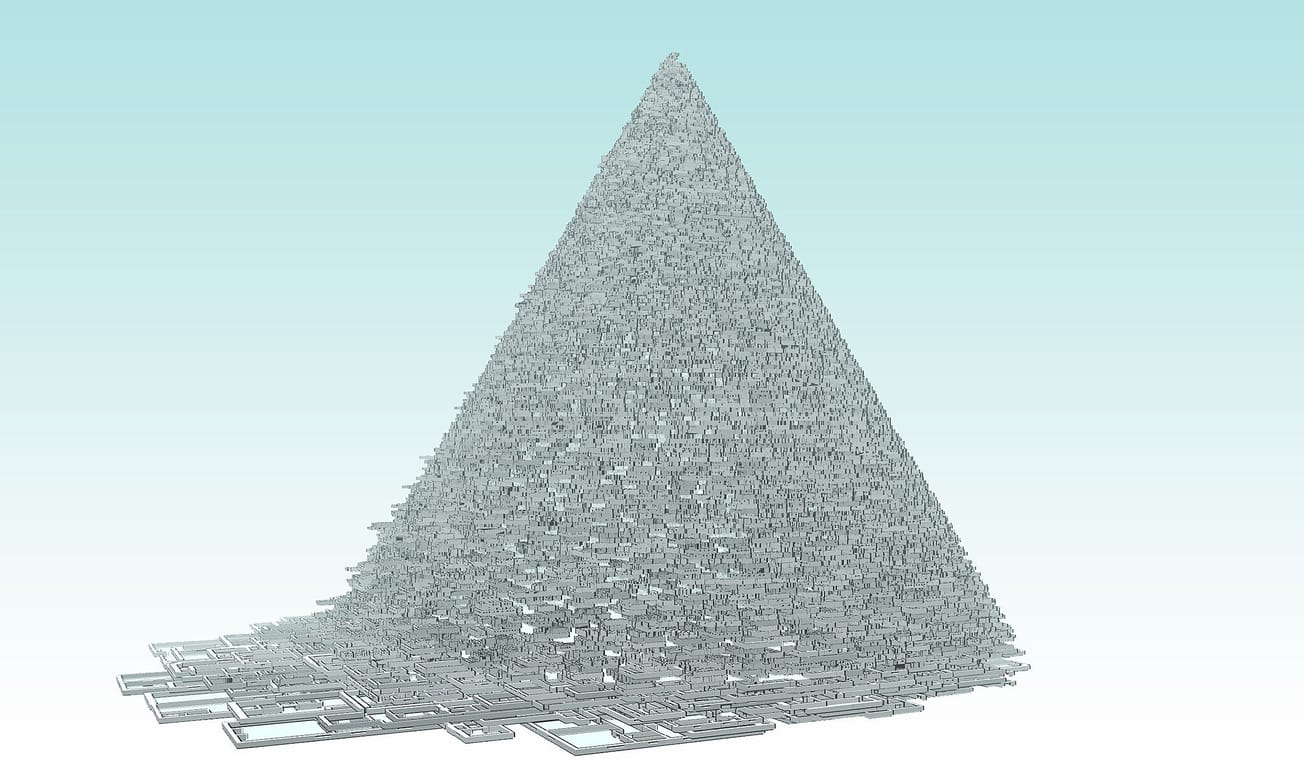

Welcome to the crisis of digital autonomy, where the line between who we are and what we’re shown is increasingly impossible to trace. Our agency is filtered through code, routed through logic gates, and housed in the opaque architecture of data centers we’ll never see.

From Personalization to Predestination

Let’s start with the promise: algorithms were supposed to save us from the chaos of infinite content. Instead of digging through noise, we’d get precision. A custom-tailored internet. But personalization is a slippery slope. The more a system learns, the more it predicts. And the more it predicts, the more it influences.

Spotify knows what songs to play before you realize you’re sad. Netflix auto-queues your next comfort binge before you can question how you’re spending your time. TikTok feeds you entire subcultures based on how long you hovered on one post. This is no longer personalization. It is the preemptive shaping of behavior: predictive identity design, a kind of soft programming executed at the level of the interface.

We Trained the System, Now It Trains Us

Here’s the twist: we built this. Every click, like, pause, and share trained the algorithm. We supplied the feedback loops. But those loops have become behavioral traps.

Social platforms operate on what scholars call persuasive architectures — systems explicitly engineered to elicit specific responses: engagement, addiction, desire, rage. These platforms don’t just respond to us—they modulate us. The problem is not just that the machine is influencing us. It’s that we often mistake its output for our own volition.

Imagine a feedback loop hardcoded into a neural network, endlessly compiling and optimizing. The user thinks they’re navigating a landscape—but they’re running through a maze with invisible walls and auto-generating pathways. Every choice you make reconfigures the floorplan.
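
To make the loop concrete, here is a toy sketch in Python. It is not how TikTok or any real platform ranks content; the function `run_feed`, the topic names, the `interest` values, and the `learning_rate` are all invented for illustration. The feed always shows whichever topic it currently scores highest, and every view feeds back into that score.

```python
from collections import Counter

def run_feed(interest, first_hover, rounds=20, learning_rate=0.1):
    """Toy greedy feed: show the top-scored topic, then reinforce it.

    `interest` maps each topic to how much this imaginary user engages
    with it (0..1). All names and numbers here are hypothetical.
    """
    scores = {topic: 0.0 for topic in interest}
    # One long hover is the only signal the system starts with.
    scores[first_hover] += learning_rate
    shown = Counter()
    for _ in range(rounds):
        # Always surface the topic with the highest current score.
        topic = max(scores, key=scores.get)
        shown[topic] += 1
        # Engagement with what was shown is the only feedback the system gets.
        scores[topic] += learning_rate * interest[topic]
    return shown

interest = {"cooking": 0.6, "politics": 0.5, "music": 0.9}
shown = run_feed(interest, first_hover="politics")
print(dict(shown))  # {'politics': 20}
```

In this toy run, one early hover on politics is enough: the feed shows politics all 20 times and never surfaces music, the topic this imaginary user cares about most. The system only learns about what it already chose to show.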

The Illusion of Choice

Algorithms don’t erase agency. They obscure it. The TikTok For You Page feels like discovery. Spotify’s playlists feel like taste. But what if your aesthetic, your vibe, your politics, your cultural identity were all subtly nudged into shape by invisible hands?

Take political polarization. Research suggests that engagement-driven feeds can amplify extreme content, because outrage tends to outperform nuance. And when all you see is content that confirms your beliefs and maximizes your attention, it becomes hard to distinguish what you chose to see from what was engineered to show up.

We are constantly choosing from a set of options we didn’t design: an elegantly engineered decision tree where the paths feel infinite but are constrained by code.

The Sovereignty Problem

Let’s call this what it is: a sovereignty crisis.

Sovereignty typically describes a nation’s ability to govern itself. In the digital context, it’s about the power to govern our own attention, identity, and behavior in a system where that power is increasingly outsourced to predictive models owned by private companies.

You don’t need to understand the mechanics of a recommendation engine to feel the consequences. You just need to look at how your feed shapes your friendships, your sense of self, your mood, your worldview.

To be algorithmically sovereign would mean being able to see, critique, and opt out of systems designed to optimize you for engagement, not well-being. But transparency tools are rare. Exit ramps are even rarer. The infrastructure is built for containment, not escape.

A Moment of Acknowledgement

Jack Dorsey, former CEO of Twitter, recently said: “The algorithms are definitively programming us. We are being programmed. These algorithms know our preferences better than us. That’s only going to increase.” He made the comment while describing an alternative relationship with technology, one in which we have agency over the algorithms we choose to use. Even so, the statement is a challenging one.

Dorsey’s comment joins a growing list of insider reflections. Chamath Palihapitiya, a former Facebook VP, once admitted he helped build tools that are “ripping apart the social fabric of how society works.” Tristan Harris, ex-Google ethicist, called tech platforms “the most powerful form of persuasion in human history.” These aren’t fringe critiques. They’re warnings from the architects of the system.

Toward Algorithmic Literacy

One way forward is through algorithmic literacy: teaching people not just how platforms work, but how they shape what we see and feel. Media theorists, educators, and designers are pushing for this literacy to be as fundamental as reading or math.

Imagine onboarding experiences that tell you: “This app will try to keep you here as long as possible. It will learn what triggers your emotions and feed them back to you.” Some platforms are experimenting with transparency. TikTok now shows why certain videos are recommended (though in vague terms). YouTube offers some user control over watch history. But most algorithms remain black boxes—intentionally so.

Design That Respects Agency

The more radical move is redesign. What if we built platforms that weren’t optimized for engagement but for consent? What if digital tools respected time, attention, mental health? Designers and developers are exploring “humane tech” principles: slow design, friction-by-design, randomized feeds, opt-in discovery instead of infinite scroll. These are not mainstream—but they are growing.

Projects like the “Unalgorithmic Feed” or “Decenter” experiment with non-predictive interfaces. Even indie platforms like BeReal (briefly) flirted with ideas of authenticity over optimization. It’s early. But the seeds are there.
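
As a rough illustration of one of those principles, the randomized feed, here is a minimal Python sketch. It does not describe any of the projects named above; the function names, the `predicted_engagement` field, and the sample posts are assumptions made up for the example. The first function ranks by a predicted engagement score, the second ignores that score and shuffles.

```python
import random

def engagement_feed(posts):
    """Order posts by predicted engagement, highest first."""
    return sorted(posts, key=lambda post: post["predicted_engagement"], reverse=True)

def randomized_feed(posts, seed=None):
    """Return the same posts in shuffled order, ignoring the engagement score."""
    rng = random.Random(seed)  # a seed makes the shuffle repeatable for testing
    feed = list(posts)         # copy so the caller's list is left untouched
    rng.shuffle(feed)
    return feed

# Hypothetical posts with made-up scores.
posts = [
    {"id": "calm-explainer", "predicted_engagement": 0.2},
    {"id": "outrage-clip", "predicted_engagement": 0.9},
    {"id": "friend-update", "predicted_engagement": 0.4},
]

ranked = engagement_feed(posts)
shuffled = randomized_feed(posts, seed=7)
print([post["id"] for post in ranked])
print([post["id"] for post in shuffled])
```

Both feeds contain exactly the same posts. The only difference is who decides the order: a score tuned for attention, or chance. That is the design choice a randomized feed makes visible.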

You Can’t Opt Out of the Water

The hardest truth? There is no clean escape. You can’t just delete the app and reclaim your mind. Algorithms are now infrastructural. They shape markets, health systems, hiring, policing, education.

But that doesn’t mean passivity. It means understanding that autonomy is not a one-time choice—it’s a continuous negotiation. And it means demanding accountability not just from platforms, but from the cultural systems that normalize passive consumption of algorithmically curated life.

We are not just users anymore. We are being used.

The question isn’t if we’re being programmed. It’s whether we’re willing to debug the system from within.