The AI you’re using now? That’s a glorified autocomplete with a paintbrush. Slick, fast, and largely obedient. But that’s not where this is heading.

The next wave of creative AI won’t just render your ideas—it’ll react to them. Adapt to your habits. Remix your references. It will argue, evolve, and mutate alongside you. Think less Photoshop, more unpredictable studio partner with a neural twitch and a taste for aesthetic confrontation.

This is the era of adaptive AI—a new class of creative collaborator that doesn’t wait for your commands. It feeds on your style, mirrors your biases, and then proceeds to tear holes in them. You’re not feeding it prompts. You’re having a creative fight club with something built from pattern recognition, machine logic, and a hint of chaos.

And artists are already deep in it.

It’s Not a Tool—It’s a Trickster

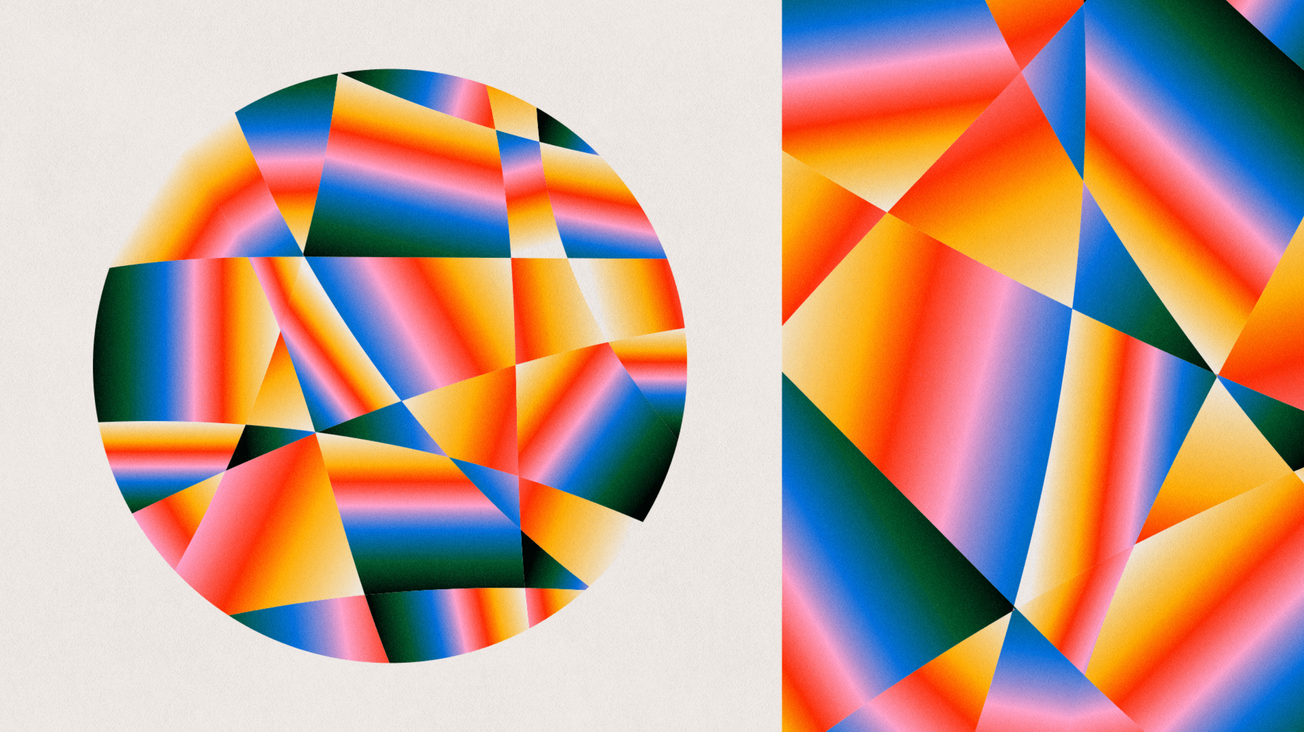

Start with Refik Anadol. In his Unsupervised installation at MoMA, he trained a generative adversarial network on data from more than 200 years of art in the museum’s collection. The AI didn’t just reorganize the canon. It hallucinated it. A continuous flow of glitchy, abstracted dreams, part archive and part alien signal, played across the museum wall as if MoMA itself were having a machine-induced acid trip.

The audience wasn’t looking at art. They were watching an algorithm learn to think in aesthetics. Anadol calls it “machine dreaming.” But what it really represents is a fundamental shift: the AI isn’t a paintbrush. It’s the studio itself—alive, volatile, and opinionated.

Then there’s Memo Akten, whose work dismantles the idea of AI as a passive image generator. In Learning to See, Akten built an AI system that continuously attempts to interpret a live camera feed through the lens of what it has been trained on—textures like clouds, fire, or flowers. The result isn’t a literal recognition—it’s a generative projection of its learned assumptions, a visual negotiation between perception and expectation, machine and human.

You move your hand. The AI sees a storm. You show it a room. It hallucinates petals. Akten’s system doesn’t render what’s there—it reveals how machine perception invents its own reality. It’s collaboration through tension, a duet of misrecognition and transformation. Akten’s work reminds us: the moment an AI starts seeing differently from you, it’s already thinking with you.

Style is a System—And AI is Learning It

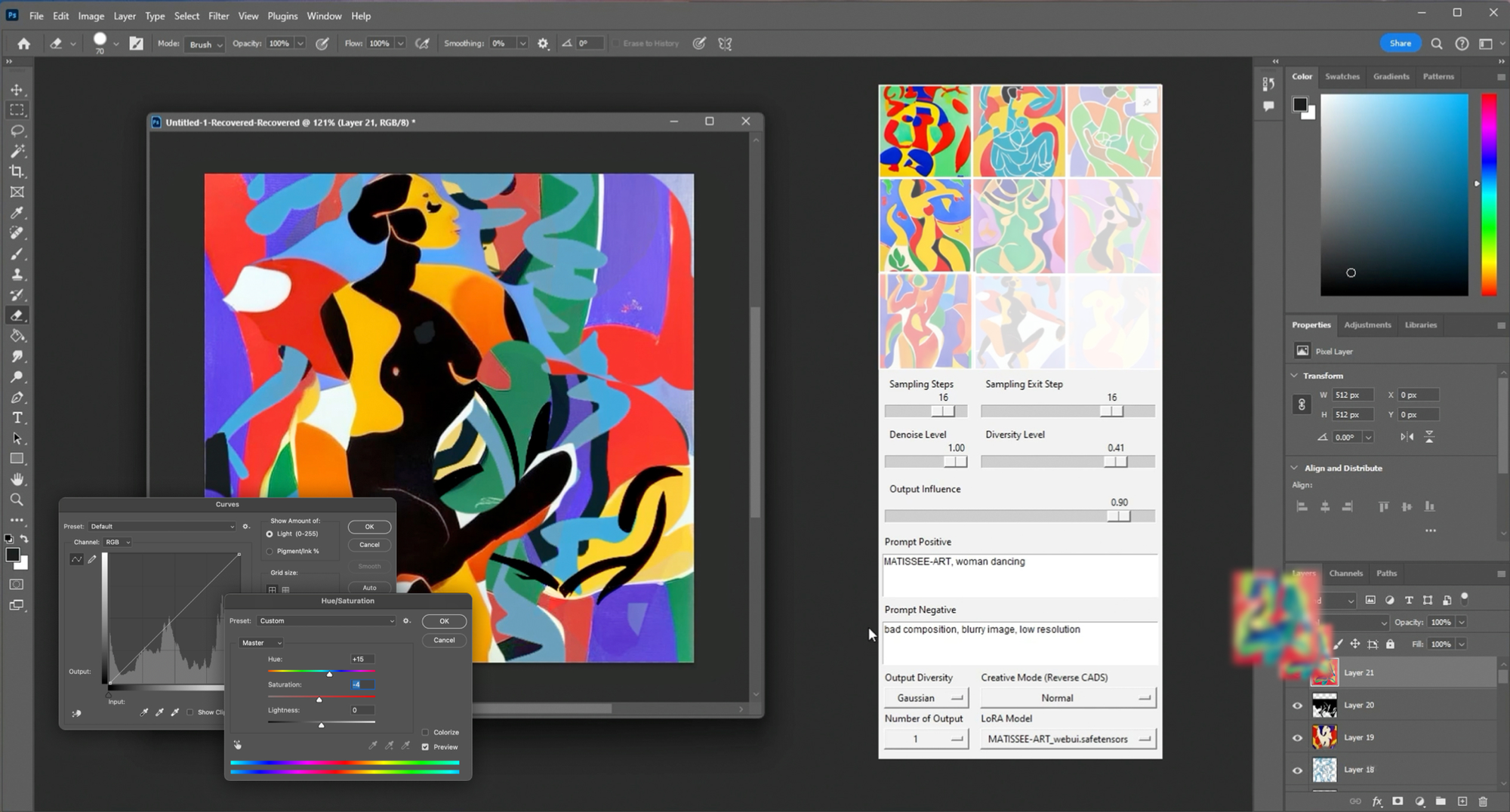

Most generative AI still obeys. You prompt, it performs. But tools like LACE (Language-Augmented Creative Environment) are rewriting that exchange.

LACE is built for image-makers who want feedback, not just outputs. It lets artists and AI work in parallel, remixing and iterating across layers of influence. The goal isn’t automation. It’s conversation. In trials, creators reported higher satisfaction and a stronger sense of authorship when collaborating with LACE.

You’re not prompting a tool. You’re improvising with a system that has aesthetic instincts of its own.

The AI Drawing Partner takes this further. Built to challenge rather than complete your sketches, it engages in what cognitive scientists call "co-creative sense-making." You draw, it responds. You nudge, it resists. The loop isn’t about finishing the picture. It’s about keeping the tension alive.

That tension? It’s the new raw material.

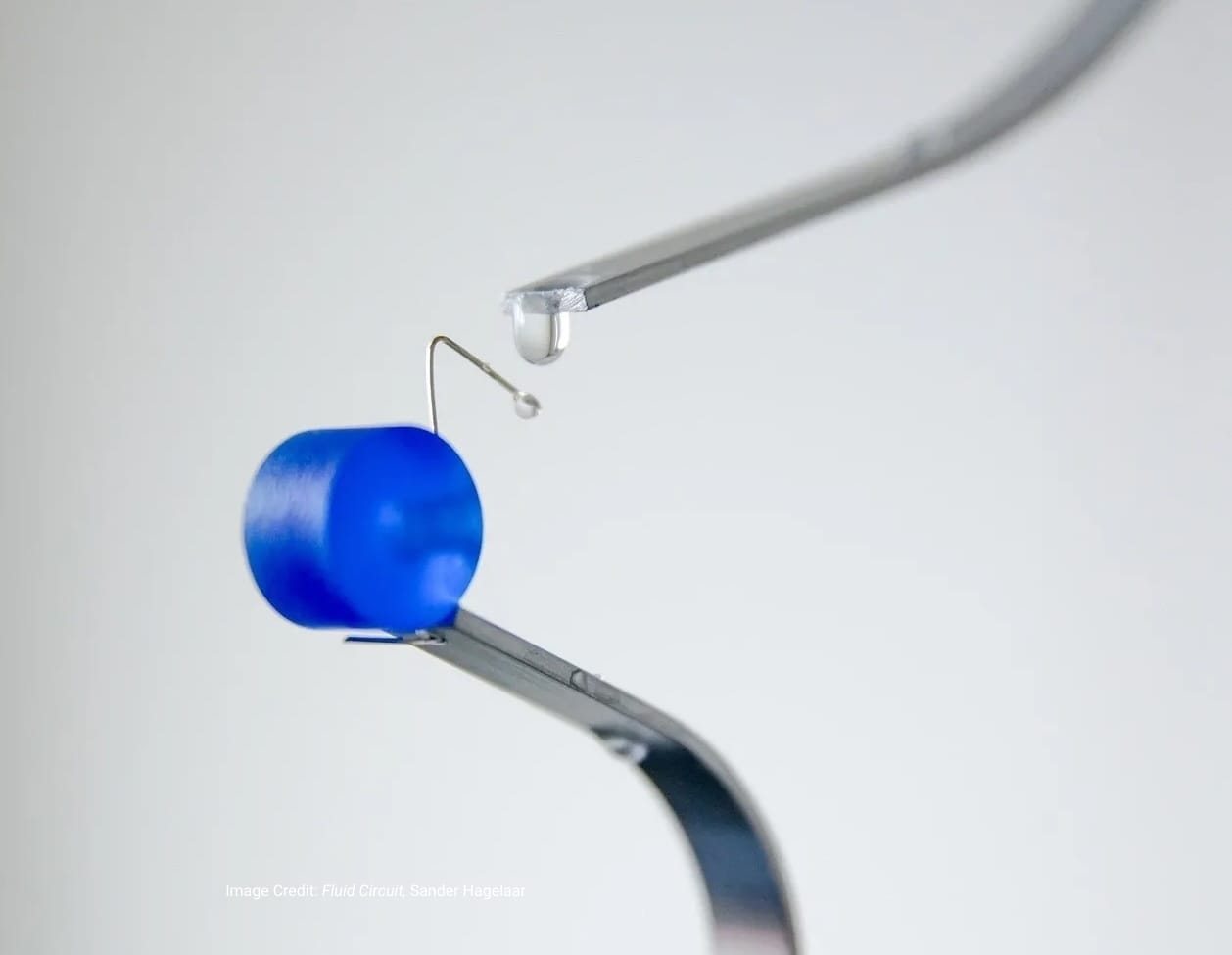

Designing Materials, Thinking in Matter

Outside the screen, AI is evolving into a co-designer of material reality.

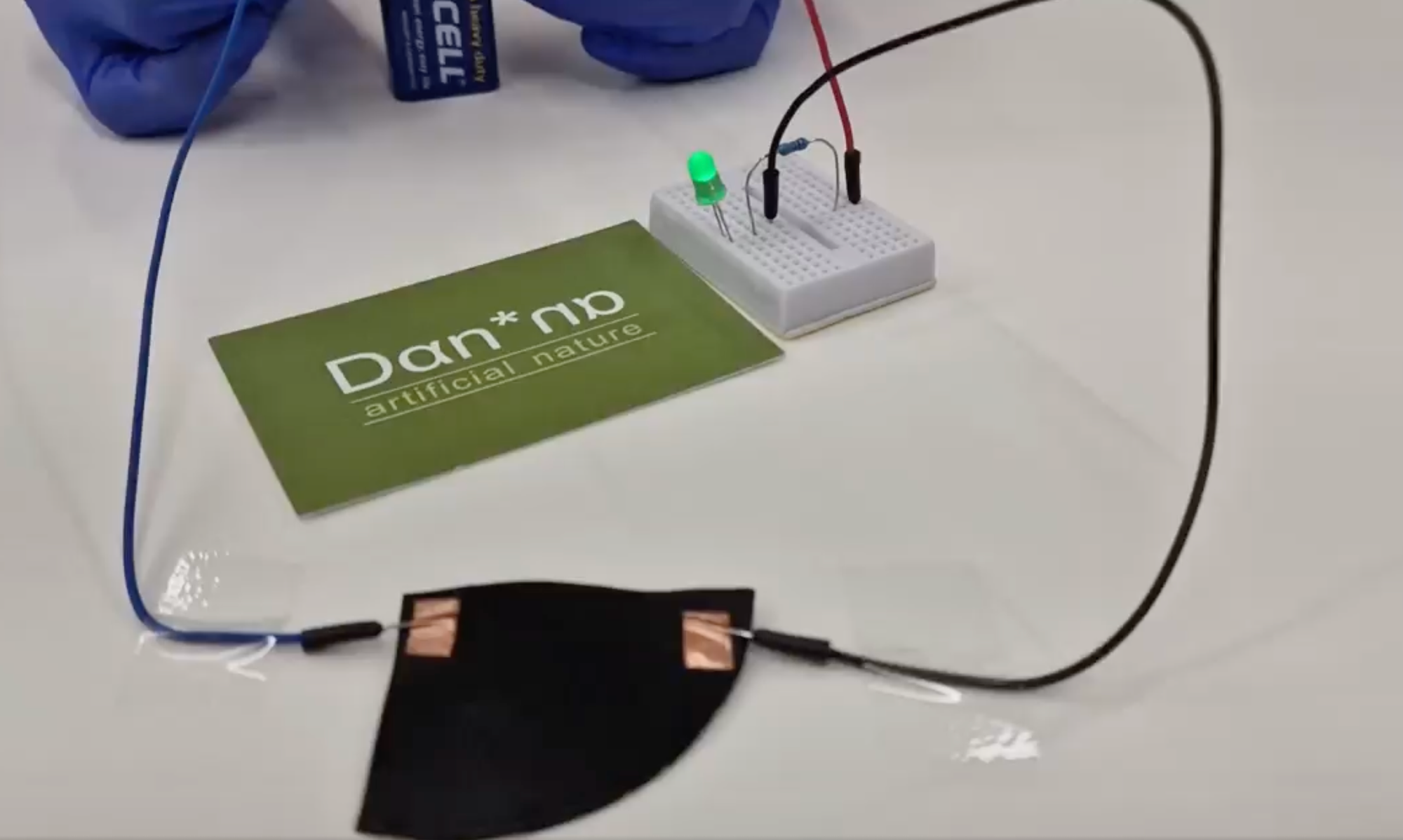

Barcelona-based biotech firm DAN*NA uses machine learning to engineer high-performance bioplastics. Its latest bio-PLA is optimized for flexibility and clarity, which suits it to 3D-printed medical devices, sustainable packaging, and even tissue scaffolds. The firm uses AI-driven simulations to create digital 'twins' of target materials, which lets it predict and fine-tune physical properties without extensive lab trials. AI isn’t just speeding up material R&D. It’s shaping what’s possible at the molecular level.

The now-closed, IKEA-backed lab SPACE10 was designing at planetary scale. In Products of Place, it used AI (including ChatGPT) to scan local waste streams (think seaweed, coconut husks, salmon bones) and suggest viable product applications rooted in regional ecology.

It wasn't just circular design. It was speculative anthropology via synthetic logic.

The Creative Studio Just Got Weirder

This isn’t hype. It’s the beginning of a new authorship model—one where creative identity is co-constructed by unpredictable agents. Where AI doesn’t serve your aesthetic—it messes with it. And that mess? That’s where breakthroughs happen.

The future of creative practice isn’t fully human or fully synthetic. It’s a hybrid domain, shaped by friction, feedback, and shared control. Style will no longer be a button you press. It will be an argument you have. A rhythm you negotiate.

You won’t just make with AI. You’ll think with it. And if you’re lucky, it won’t always agree with you.