In a world humming with invisible computation, the interface is vanishing. You don’t swipe, tap, or click. Instead, the space listens. It responds, adapts, and—most unnervingly—it remembers. Posthuman user experience in adaptive environments is redefining how we engage with technology, as ambient computing and spatial AI blur the boundaries between human and machine interaction.

The Disappearing Interface

Mark Weiser coined the term ubiquitous computing at Xerox PARC in the late 1980s, describing a vision of devices receding into the background of daily life. That vision is no longer speculative; it’s operational. Ambient platforms like Apple's HomeKit and Google Nest, along with device networks like Amazon Sidewalk, now permeate our homes, while wearables and connected cars carry the same logic into our bodies and commutes. These systems blur the line between hardware and habitat, turning spaces into intelligent agents.

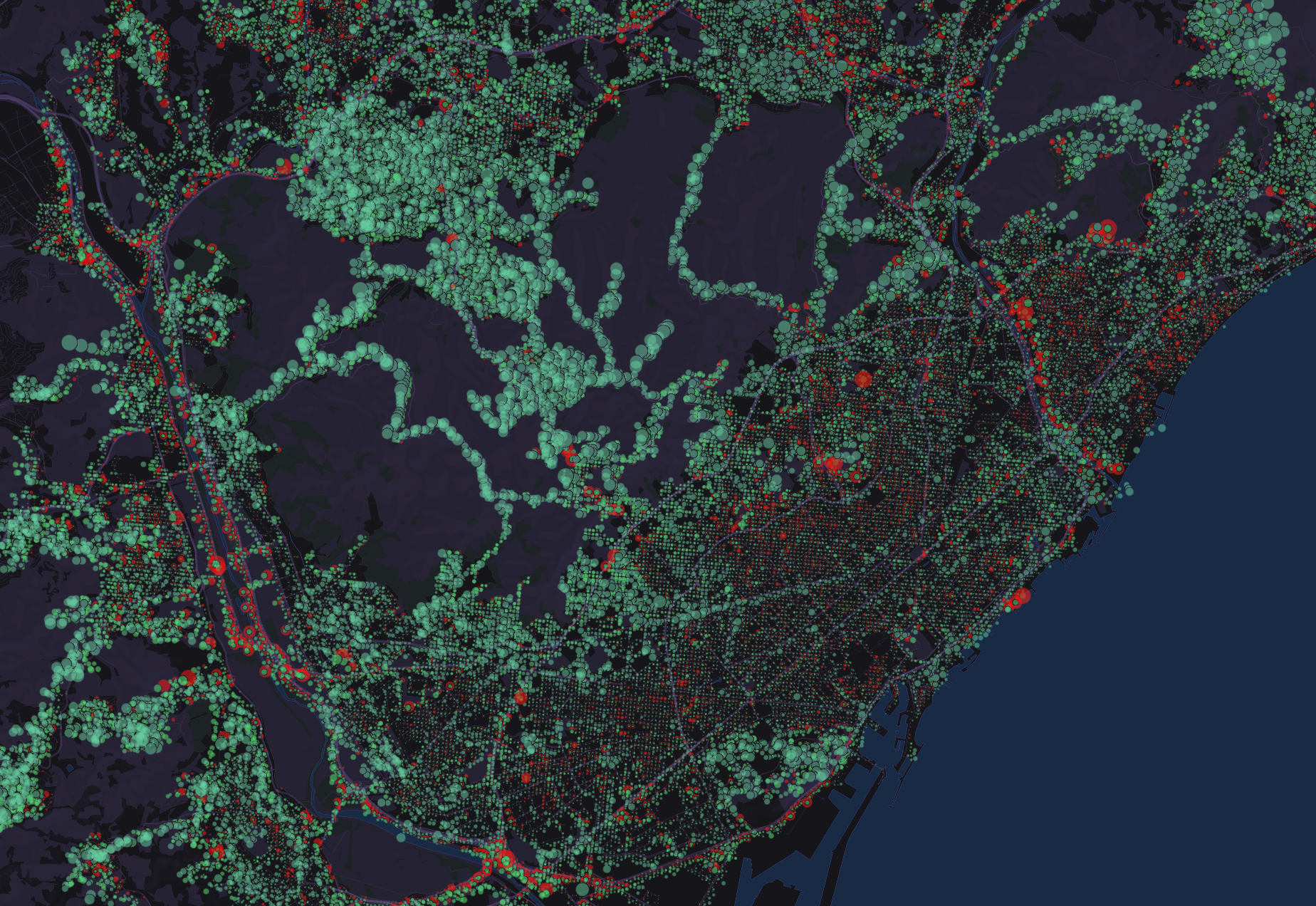

At the heart of this shift lies spatial AI—technologies that interpret, predict, and interact with physical space using real-time data from sensors, cameras, and microphones. It’s the same logic behind autonomous vehicles, augmented reality, and smart retail environments. In these systems, the interface is no longer a screen, but a choreography of behaviors, gestures, gazes, and biometric signals.

The design challenge? You’re not designing a thing anymore—you’re designing context.

Posthuman UX: Where Agency Gets Fuzzy

Traditional UX relies on clearly defined interactions: input leads to output, action to reaction. But in ambient systems, interaction is implicit, probabilistic, and often opaque. You walk into a room, and the temperature adjusts. Lighting shifts. A playlist starts, not because you asked, but because the environment “knows” you. Or thinks it does.

This posthuman UX decouples human intention from control. Interaction becomes inference. Autonomy is redistributed away from the user and toward the space itself. The machine doesn’t wait for you to act. It anticipates, interprets, and sometimes decides. It breathes back. And herein lies the critique: as environments become more predictive, are they also becoming more prescriptive?

Surveillance by Design

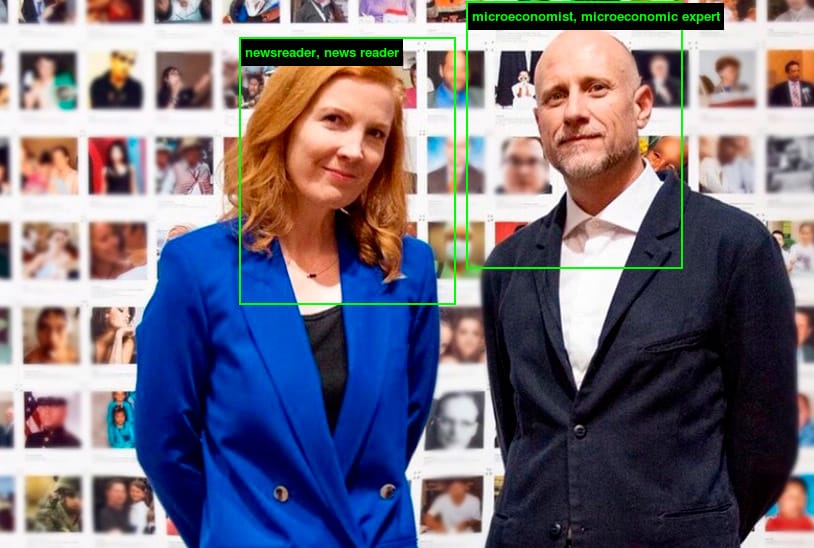

Take facial-recognition-enabled retail displays that change product recommendations based on your perceived age or mood. Or office environments that use computer vision to track “collaboration patterns.” These are not hypothetical scenarios; they are real deployments.

Ambient systems often operate in the liminal space between surveillance and service. This makes consent murky and privacy porous. Unlike a traditional app, you can't just "log out" of a smart room. Your very presence becomes a data point.

Designing for ambient environments thus raises a moral question: should responsiveness always be the goal? Or should design introduce friction, such as deliberate slowness, moments of pause, even refusal?
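The choice between responsiveness and friction can be made concrete. Below is a minimal, hypothetical sketch of a confidence-gated response policy: the environment acts on its own only when its inference is strong, asks the occupant when it is unsure, and otherwise does nothing. The `Inference` type, the `respond` function, the action names, and the threshold values are all illustrative assumptions, not taken from HomeKit, Nest, or any other real platform.

```python
from dataclasses import dataclass


@dataclass
class Inference:
    # Hypothetical model output: what the room thinks you want, and how sure it is.
    action: str        # e.g. "playlist_start"
    confidence: float  # estimated probability, 0.0 to 1.0


def respond(inference: Inference,
            act_threshold: float = 0.9,
            ask_threshold: float = 0.6) -> str:
    """Decide how an ambient system answers an inferred intent.

    - confidence >= act_threshold: act automatically (pure responsiveness)
    - ask_threshold <= confidence < act_threshold: add friction by asking first
    - confidence < ask_threshold: wait instead of acting on a weak guess
    The thresholds are illustrative assumptions, not recommended values.
    """
    if ask_threshold > act_threshold:
        raise ValueError("ask_threshold must not exceed act_threshold")
    if inference.confidence >= act_threshold:
        return f"act:{inference.action}"
    if inference.confidence >= ask_threshold:
        return f"ask:{inference.action}"
    return "wait"


if __name__ == "__main__":
    print(respond(Inference("lights_dim", 0.95)))      # act:lights_dim
    print(respond(Inference("playlist_start", 0.72)))  # ask:playlist_start
    print(respond(Inference("temperature_up", 0.40)))  # wait
```

The design question hides in the two thresholds: lowering `act_threshold` makes the room feel more attentive, but it also means acting on guesses the occupant never confirmed.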

From UX to EX: The Experience Layer

As spaces grow more responsive, user experience (UX) shifts toward experience architecture (EX). Designers now operate more like directors of multisensory theatre than app developers. The metrics change. Engagement isn't about time spent or buttons clicked, but about emotional tone, behavioral flow, and environmental resonance.

Companies like LG and Samsung are investing in "experience homes" where AI-driven spatial responses are tested on high-net-worth beta users. Meanwhile, startups like Ori Living are integrating robotics into architecture—automated walls, shifting furniture, modular space design—all programmed via ambient triggers. This signals a future where environments don’t just contain experiences—they curate them.

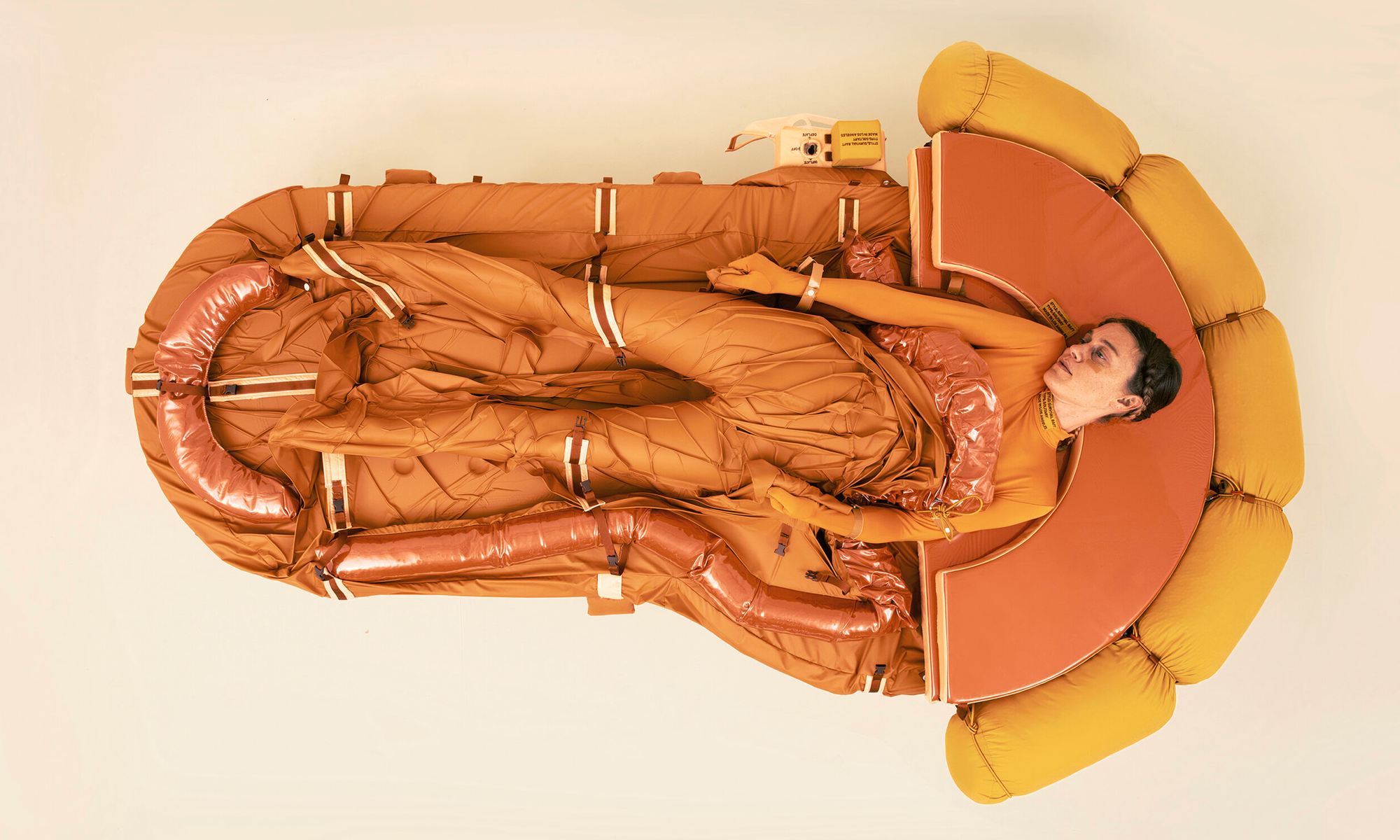

Designers like Lucy McRae, known for her speculative “body architecture,” explore how future environments might physically adapt to the psychological states of their occupants—melding biotech, responsive architecture, and affective computing.

Liam Young, an architect and filmmaker, creates immersive worlds that use narrative, sound, and AI-driven spatial design to explore how adaptive environments might structure not just movement, but thought itself.

These practitioners push spatial intelligence into territory where UX meets narrative systems, sensory design, and posthuman ethics—creating environments that are both responsive and critically reflective.

The Creative Opportunity: Hacking the Habitat

For creatives working at the intersection of code and culture, adaptive environments present both a canvas and a critique. Artists like Refik Anadol use machine learning to transform spatial memory into immersive installations, pushing ambient tech into the realm of the sublime. Meanwhile, critical designers are prototyping “disobedient” smart objects—like furniture that resists surveillance or environments that require emotional consent.

Rael San Fratello, an architecture studio led by Ronald Rael and Virginia San Fratello, uses advanced fabrication, robotics, and spatial computing to explore contested spaces and challenge dominant narratives of technology. Their work, such as the Teeter-Totter Wall and their 3D-printed earth structures, repositions adaptive systems as tools for resistance, empathy, and civic critique. In their hands, ambient tech becomes a way to reclaim space, not just automate it.

There’s an urgent need for design languages that interrupt, not just anticipate. For UX frameworks that include not just efficiency, but ethics. And for spatial systems that don’t just adapt to users—but allow users to adapt them back.

What Happens When the Environment Has Intent?

In adaptive systems, intentionality shifts. You might walk toward a door, and it opens before you reach for the handle. But what happens when that gesture is misread? When your stillness signals indifference to one system and agitation to another?

This is the crux of posthuman UX: interaction is no longer reciprocal, but emergent. It's not just about what you do—but what the system thinks you’re doing. That means errors become existential. Misinterpretation isn’t just a bug—it’s a breach of presence.

Rethinking Metrics and Models

If the interface is ambient, then traditional UX metrics collapse. Click-through rates, heat maps, and A/B testing give way to environmental sensing, predictive modeling, and behavioral inference. The ethical question becomes: what are you optimizing for?

In a future where environments can “nudge” behavior, the temptation to design for influence—not empowerment—is strong. Spatial AI could guide people toward health, sustainability, or civic engagement. But it could also steer them toward profit, bias, or compliance.

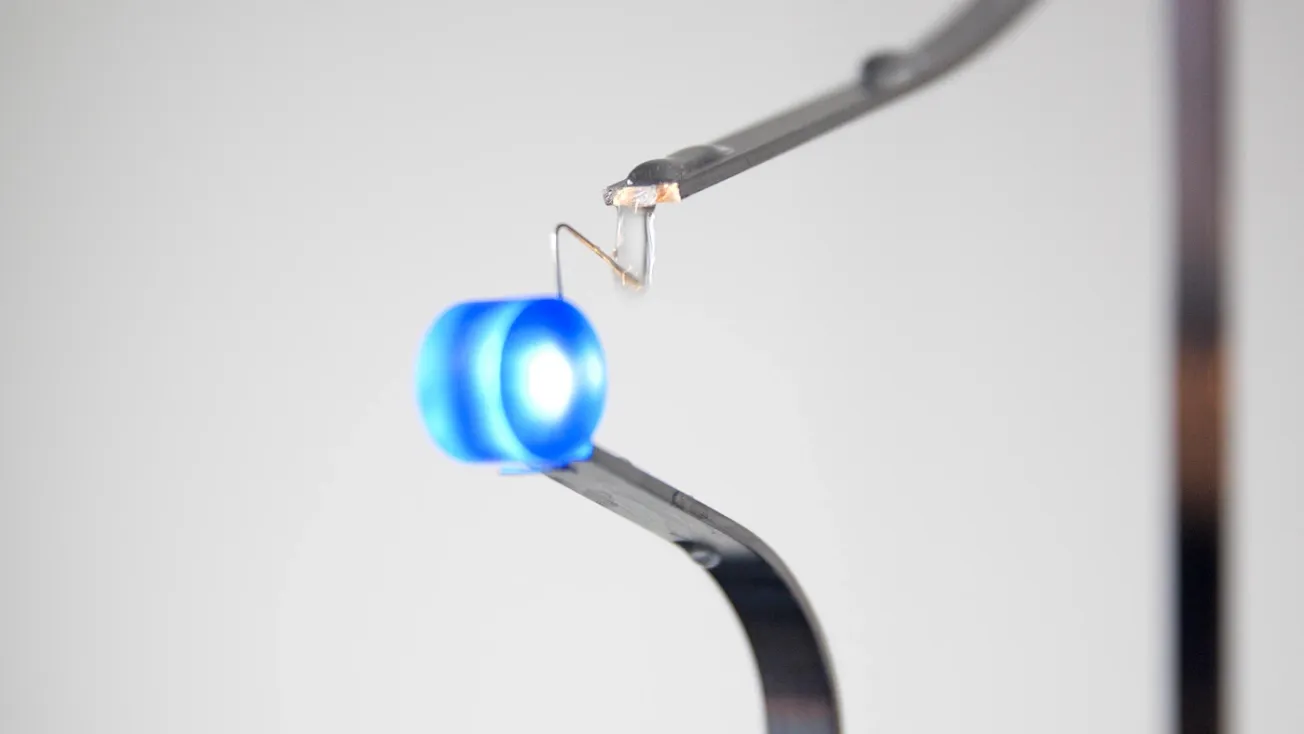

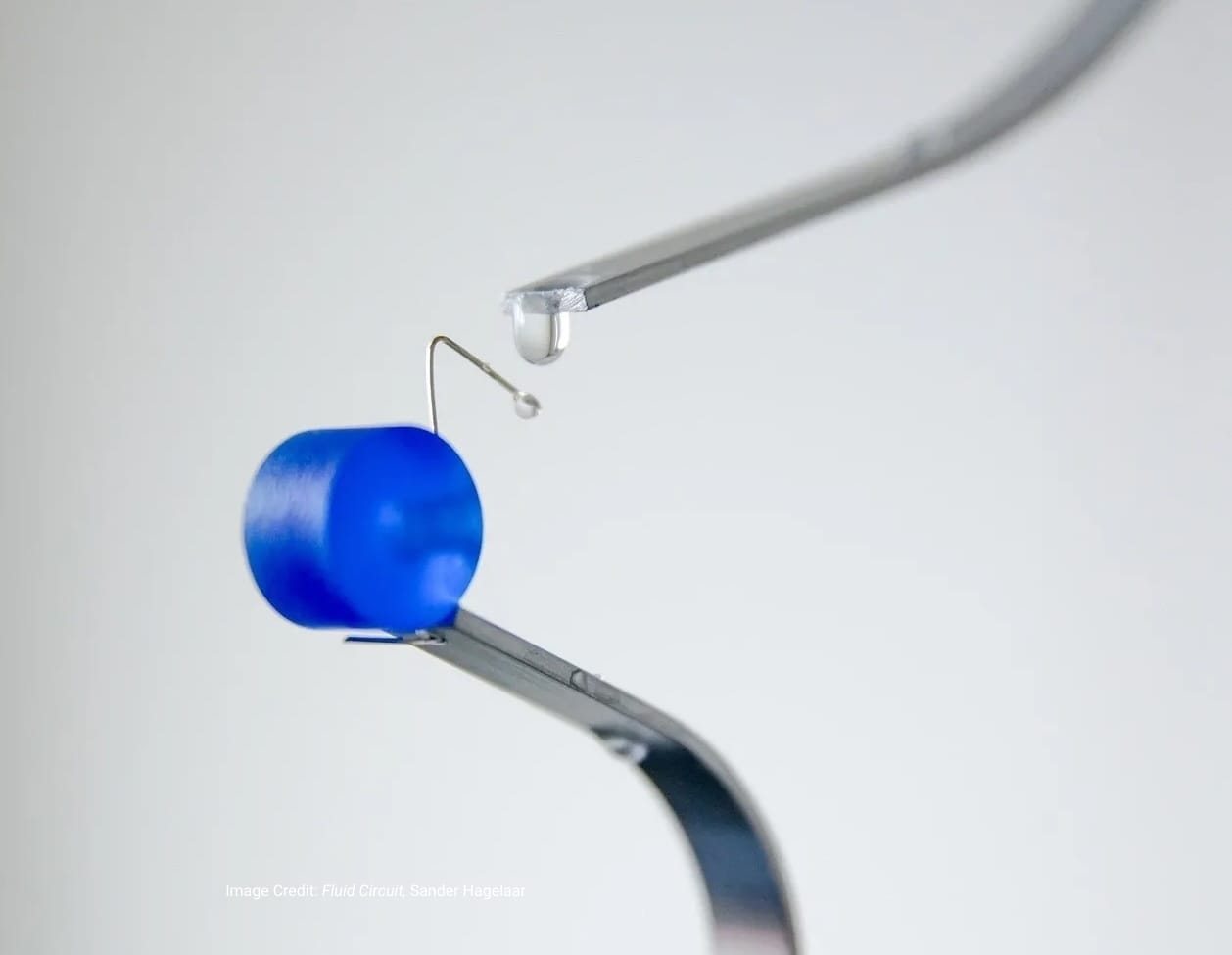

At the Morphing Matter Lab at Carnegie Mellon, researchers are developing materials that sense and respond to ambient conditions—hinting at new ways to measure engagement not through user input, but through material adaptation, spatial shifts, and environmental feedback. These systems don’t just track interaction—they embody it. In their Morphing Circuits project, flat circuit boards transform autonomously into 3D forms, demonstrating how interactivity can be encoded directly into the physical structure, where material behavior becomes both the interface and the metric.

To safeguard the future of UX, we must re-center human complexity in the design of non-human systems.

Designing With, Not For

Ambient computing and spatial AI mark a radical shift in how we think about technology—not as tools, but as inhabitants of shared space. This demands a rethinking of UX—one that acknowledges both the promise and peril of disappearing interfaces.

The posthuman user isn’t just a person; it’s a participant in a network of decisions, signals, and adaptations. In this new paradigm, we don't just design systems—we live inside them.

The machine breathes back. The question is: do we still recognize ourselves in the reflection?