The next generation of tools for artists and designers isn’t just smarter. It’s adaptive. Forget static software suites and passive fabrication machines. At the intersection of digital fabrication, biomaterials, AI and machine learning, and immersive environments, the creative process itself is evolving. Tools are becoming collaborators. Materials are responding. Studios are transforming into systems. In five to ten years, making art may be less about controlling a tool and more about working in feedback loops, with autonomous systems and living matter shaping outcomes alongside the artist.

AI That Negotiates, Not Just Generates

Generative AI today produces visuals quickly. What’s coming may go further: adaptive systems that learn your style, challenge your assumptions, remix your references, and propose unexpected variations. Think of it less as using software and more as holding a conversation with a system that critiques your choices. Stephanie Dinkins, whose work with AI and socially engaged practice interrogates the politics of algorithms and co-authorship, is already pushing these boundaries. Her project *Not the Only One* features a multigenerational AI trained on oral histories from her own family, surfacing questions of race, memory, and representation in machine learning. Dinkins challenges the default assumptions encoded into AI systems, reframing them as cultural artifacts that must be critically shaped, not just technically optimized. The next wave of tools may act more like collaborators than instruments: part pattern-recognition engine, part critical interlocutor.

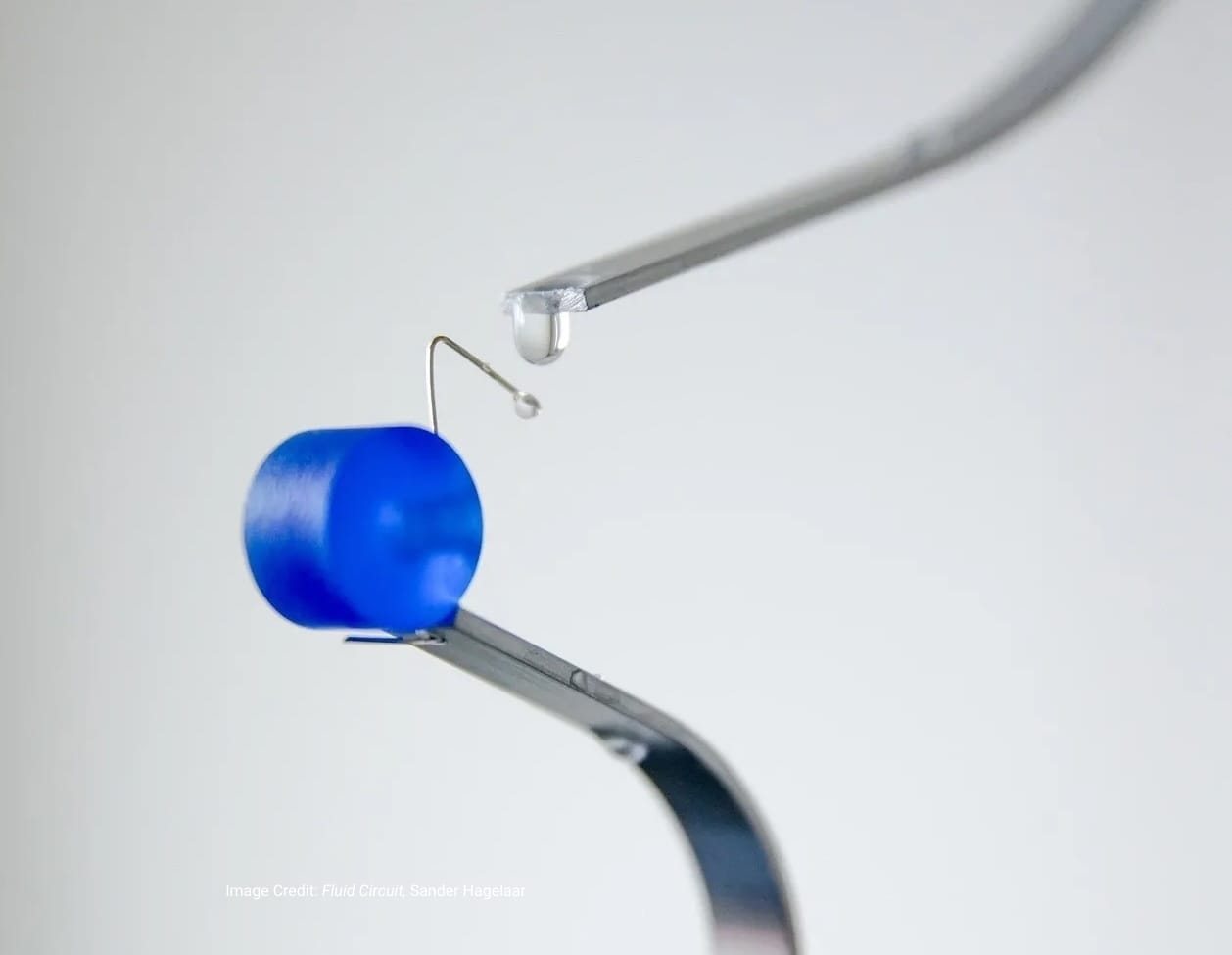

Biomaterials That Grow Themselves

The physical materials of design are changing too. Researchers at MIT’s Self-Assembly Lab are developing responsive materials and programmable matter that adapt to their environment. Their work includes 4D printing, in which printed structures change shape over time in response to moisture, temperature, or light, as well as inflatables that self-construct under specific conditions. The lab’s approach blends design, engineering, and natural systems to produce structures that are both efficient and reactive. Organizations such as Faber Futures and Biofabricate are also driving innovation in biofabrication, from bacterial dyes to lab-grown textiles.

Artists and designers may soon grow their own raw materials: cultivating fungi for structural forms, coaxing bacteria to pattern fabric, or printing organic pigments that shift in response to environmental conditions. Studios could monitor these processes like ecosystems, tracking pH, humidity, and growth rates alongside cost and color. In this model, sourcing material looks more like agricultural design than industrial supply-chain logistics.

Immersive Studios Replace Workstations

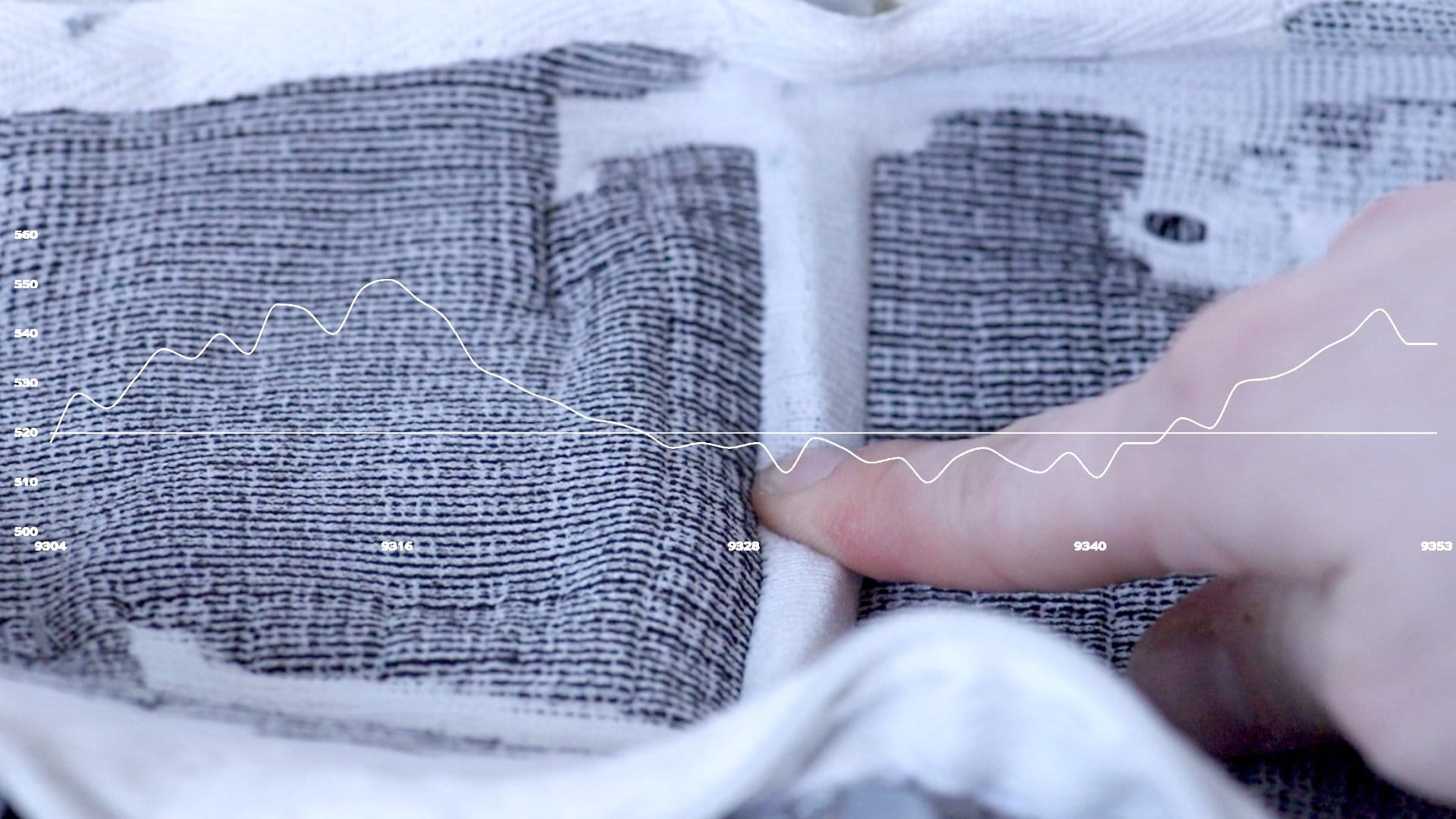

The creative workspace is also on the brink of a major overhaul. Instead of desks and screens, artists will work in immersive environments: shaping spatial sound, manipulating volumetric visuals, and designing with gesture or voice input.

Keiichi Matsuda’s mixed-reality work, which blends architectural environments with responsive overlays, offers a glimpse of this shift. As spatial computing matures, artists may no longer look at their work on screens; they’ll step inside it. With haptic gloves, lightweight XR headsets, and ambient sensors, physical and digital fabrication could merge into a continuous process. Instead of modeling on a screen and printing later, artists would shape form and receive feedback at the same time.

As creative AI advances, technical knowledge of scripting languages and complex toolsets will become less of a barrier. Many artists won’t need to write code or navigate dense interfaces at all: no-code and low-code platforms will let natural-language prompts and gesture-based commands generate detailed outputs. This doesn’t mean complexity disappears. It gets buried deeper in intuitive, layered interfaces that prioritize creative momentum. Mastery will shift from syntax to conceptual clarity.

What the Future Studio Looks Like

Parts of it are already being prototyped. Picture:

- A Living Lab where mycelium printers and adaptive AI modeling stations generate self-repairing surfaces.

- An Immersive Sandbox where gesture and eye-tracking shape architecture on the fly.

- A No-Code Atelier where your voice and sketchpad drive robotic arms and fabrication workflows.

- A Multisensory Fabrication Suite where smell, vibration, and temperature are considered core components of every output.

- A Microfactory that fabricates, self-maintains, and logs its own usage data—ready to scale or evolve as needed.

In the future studio, materials won’t just be visual or structural; they’ll be multisensory. Sculptures may emit scent. Textiles and architectural surfaces will behave responsively, changing as the conditions around them change. Sound and haptics will be as integral as color and form. The concept of “material” will expand to include vibration, bioelectric response, and light modulation, blurring the lines between object, experience, and interface.

This isn’t science fiction. It’s the early edge of an infrastructure shift already underway in university labs, design studios, and experimental startups around the world. The creative future isn’t about adding more tools. It’s about building systems that work with you, and occasionally against you, to push what creativity can be.